Installation and Upgrade

Requirements

Operating System

Verify that the following software prerequisites are installed. They are mainly required by the Spectrum Protect server (TSM); in addition, check the latest IBM documentation.

SuSE Linux Enterprise (11 and up):

libstdc++33-3.3.3-11.9

libstdc++6-32bit-4.7.2_20130108-0.15.45

libstdc++46-4.6.9-0.11.38

libstdc++43-4.6.9-0.11.38

libstdc++33-32bit-3.3.3-11.9

libstdc++43-32bit-4.6.9-0.11.38

libstdc++46-32bit-4.6.9-0.11.38

libstdc++6-4.7.2_20130108-0.15.45

libaio-0.3.109-0.1.46

libaio-32bit-0.3.109-0.1.46

libopenssl0_9_8-0.9.8j-0.50.1

openssl-0.9.8j-0.50.1

libopenssl0_9_8-32bit-0.9.8j-0.50.1

openssl-certs-1.85-0.6.1

sharutils-4.6.3-3.19

ksh-93u-0.18.1

perl-CGI-4.XX

Red Hat Enterprise Linux (7 and up):

libstdc++-4.8.5-11.el7.x86_64

libaio-0.3.109-13.el7.x86_64

numactl-libs-2.0.9-6.el7_2.x86_64

numactl-2.0.9-6.el7_2.x86_64

openssl-1.0.2k-12.el7.x86_64

openssl-libs-1.0.2k-12.el7.x86_64

openssl098e-0.9.8e-29.el7.centos.3.x86_64

sharutils-4.13.3-8.el7.x86_64

ksh-20120801-26.el7.x86_64

perl-CGI-4.XX

Caution

If you want to deploy your instances with spordconf, only RHEL 8 or later is supported.

Users

GSCC itself does not require a dedicated user. To configure the users for IBM Spectrum Protect, consult the corresponding section of the IBM documentation.

Storage

Ensure that enough space is available on the filesystems containing the following directories:

| Directory | Usage | Space |
|---|---|---|
| /opt/generalstorage/ | Application files | 100 MB |
| /var/gscc/ | Logs and cluster communication | 5 GB (depending on configuration) |
| /etc/gscc/ | Configuration files | 100 MB |
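Before installing, the free space can be checked against the table above. The following is a minimal sketch: the function name check_free_mb is hypothetical, and it assumes a df that supports POSIX output (df -P). Because the directories do not exist before installation, it checks the nearest existing parent directory instead.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the filesystem that will hold DIR
# has at least MIN_MB megabytes (MiB) available.
check_free_mb() {
  local dir=$1 min_mb=$2 avail
  # Walk up to the nearest existing parent (e.g. /opt for /opt/generalstorage/).
  while [ ! -d "$dir" ]; do
    dir=$(dirname "$dir")
  done
  # df -P prints one line per filesystem; -m reports 1 MiB blocks.
  # Column 4 of the second line is the available space.
  avail=$(df -Pm "$dir" | awk 'NR == 2 { print $4 }')
  [ "$avail" -ge "$min_mb" ]
}

# Example with the thresholds from the table (5 GB taken as 5120 MiB):
# for spec in /opt/generalstorage/:100 /var/gscc/:5120 /etc/gscc/:100; do
#   check_free_mb "${spec%%:*}" "${spec##*:}" || echo "not enough space for ${spec%%:*}"
# done
```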

Network and Firewall Access

Make sure that every port configured for the Spectrum Protect servers and clients, as well as the ports used for GSCC communication (see Concepts), is reachable through any firewall between the cluster nodes and their clients.
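Once the firewall rules are in place, you can test reachability from the other node without extra tools. This sketch relies on bash's /dev/tcp pseudo-device and the coreutils timeout command. port_open is a hypothetical helper name. A failed check can also mean that nothing is listening on the port yet, so run it after the services are up.

```shell
#!/bin/bash
# Hypothetical helper: succeed if a TCP connection to HOST:PORT can be
# opened within TIMEOUT seconds (default 3). Uses bash's /dev/tcp
# pseudo-device, so no extra tools such as nc or telnet are required.
port_open() {
  local host=$1 port=$2 secs=${3:-3}
  # Inside the child bash, $0 is the host and $1 is the port.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Example on lxnode1. The ports are assumed from the example configuration
# in the installation scenarios below (ISP 1601/1621, GSCC 3939, HADR 60100):
# for p in 1601 1621 3939 60100; do
#   port_open lxnode2 "$p" && echo "$p open" || echo "$p blocked or not listening"
# done
```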

Disabling Docker default bridge network

If you run SPORD with Docker, the Docker daemon by default creates a bridge network (docker0), which could interfere with your network environment:

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:f2:af:c8:99 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

SPORD does not need this bridge network, since the containers use the host network. If necessary, disable it BEFORE starting the Docker daemon for the first time by creating or editing /etc/docker/daemon.json and setting this option:

{

"bridge": "none"

}

Make sure the JSON syntax is correct. An example /etc/docker/daemon.json file could look like this:

sles-02:~ # cat /etc/docker/daemon.json

{

"bridge": "none",

"log-level": "warn",

"log-driver": "json-file",

"log-opts": {

"max-size": "10m",

"max-file": "5"

}

}
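A syntax error in daemon.json prevents the Docker daemon from starting, so it can be worth validating the file first. The following sketch assumes python3 is installed and uses its json module as the parser. check_daemon_json is a hypothetical helper, not a Docker or SPORD tool.

```shell
#!/bin/bash
# Hypothetical helper: succeed if FILE is valid JSON and sets "bridge": "none".
# Assumes python3 is available; its json module does the parsing.
check_daemon_json() {
  python3 - "$1" <<'EOF' 2>/dev/null
import json, sys
with open(sys.argv[1]) as f:
    cfg = json.load(f)          # raises (non-zero exit) on a syntax error
sys.exit(0 if isinstance(cfg, dict) and cfg.get("bridge") == "none" else 1)
EOF
}

# Usage before the first start of the Docker daemon:
# check_daemon_json /etc/docker/daemon.json && echo "daemon.json OK"
```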

Installing GSCC/SPORD

To install GSCC or SPORD, use one of the following commands:

RHEL/SLES:

rpm -ivh gscc-3.8.6.2-0.x86_64.rpm

rpm -ivh spord-3.8.6.0-0.x86_64.rpm

Ubuntu:

dpkg -i gscc_3.8.6.2-0_amd64.deb

dpkg -i spord_3.8.6.0-0_amd64.deb

Installation Scenarios

GSCC IP Cluster

In this example, the following setup is used:

- Two Linux nodes with SLES 11 SP3: lxnode1, lxnode2
- Each node has two additional internal disks besides the OS disk: /dev/fioa, /dev/fiob
- Two ISP instances are created: TSM1, TSM2

Step 1: LVM Configuration

On each Linux node, a separate volume group is created for each ISP instance. The advantage is that the ISP instances do not share any disks. The main disadvantage is that neither instance can grow beyond the capacity of its disk. Alternatively, a common volume group could be created for both ISP instances on shared disks, which would allow the instances to grow independently.

In this example, each of the two partitions of the Fusion I/O flash card is dedicated to one instance.

First log in to both Linux hosts as root and create the physical volumes, volume groups and logical volumes on each host. In this example, all names are identical on both hosts; the volume group names could differ between the hosts if required. Be aware that if you plan to move the volume groups to a SAN later, even the logical volume names and filesystem mount points should differ between the hosts. In this IP-only cluster, that would just make the configuration more complex.

Example LVM configuration:

lvmdiskscan

/dev/sda1 [ 2.01 GiB]

/dev/root [ 117.99 GiB]

/dev/fioa [ 1248.00 GiB]

/dev/fiob [ 1248.00 GiB]

4 disks

1 partition

0 LVM physical volume whole disks

0 LVM physical volumes

pvcreate /dev/fioa

pvcreate /dev/fiob

vgcreate TSM1 /dev/fioa

vgcreate TSM2 /dev/fiob

lvcreate -L 128G -n tsm1db1 TSM1

lvcreate -L 128G -n tsm1db2 TSM1

lvcreate -L 128G -n tsm1db3 TSM1

lvcreate -L 128G -n tsm1db4 TSM1

lvcreate -L 128G -n tsm1act TSM1

lvcreate -L 128G -n tsm1arc TSM1

lvcreate -L 8G -n tsm1cfg TSM1

lvcreate -L 128G -n tsm2db1 TSM2

lvcreate -L 128G -n tsm2db2 TSM2

lvcreate -L 128G -n tsm2db3 TSM2

lvcreate -L 128G -n tsm2db4 TSM2

lvcreate -L 128G -n tsm2act TSM2

lvcreate -L 128G -n tsm2arc TSM2

lvcreate -L 8G -n tsm2cfg TSM2
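The lvcreate commands above follow a fixed pattern for each instance, so they can also be generated in a loop. This is only a sketch. lv_commands is a hypothetical helper that prints the commands so you can review them before piping them to a shell. The sizes and names are those of this example.

```shell
#!/bin/bash
# Hypothetical helper: print the lvcreate commands for one ISP instance.
# $1 = volume group (e.g. TSM1), $2 = LV name prefix (e.g. tsm1)
lv_commands() {
  local vg=$1 prefix=$2 lv
  # Four database volumes plus active and archive log, 128 GB each
  for lv in db1 db2 db3 db4 act arc; do
    echo "lvcreate -L 128G -n ${prefix}${lv} ${vg}"
  done
  # Small volume for the instance configuration directory
  echo "lvcreate -L 8G -n ${prefix}cfg ${vg}"
}

# Review first, then execute as root:
# lv_commands TSM1 tsm1; lv_commands TSM2 tsm2
# { lv_commands TSM1 tsm1; lv_commands TSM2 tsm2; } | sh -x
```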

lxnode1:/ # vgs

VG #PV #LV #SN Attr VSize VFree

TSM1 1 7 0 wz--n- 1248.00g 0

TSM2 1 7 0 wz--n- 1248.00g 0

lxnode1:/ # lvs

LV VG Attr LSize Pool Origin Data% Move Log Copy% Convert

tsm1act TSM1 -wi-ao--- 128.00g

tsm1arc TSM1 -wi-ao--- 128.00g

tsm1cfg TSM1 -wi-ao--- 8.00g

tsm1db1 TSM1 -wi-ao--- 128.00g

tsm1db2 TSM1 -wi-ao--- 128.00g

tsm1db3 TSM1 -wi-ao--- 128.00g

tsm1db4 TSM1 -wi-ao--- 128.00g

tsm2act TSM2 -wi-ao--- 128.00g

tsm2arc TSM2 -wi-ao--- 128.00g

tsm2cfg TSM2 -wi-ao--- 8.00g

tsm2db1 TSM2 -wi-ao--- 128.00g

tsm2db2 TSM2 -wi-ao--- 128.00g

tsm2db3 TSM2 -wi-ao--- 128.00g

tsm2db4 TSM2 -wi-ao--- 128.00g

In SLES 11 it might be necessary to activate LVM at system start. The warning about “boot.device-mapper” can be ignored:

chkconfig --level 235 boot.lvm on

Step 2: Database filesystem

On both hosts, the filesystems are also created, including mount points and fstab entries.

The commands for creating filesystems could look like this:

mkfs.ext3 /dev/TSM1/tsm1db1

mkfs.ext3 /dev/TSM1/tsm1db2

mkfs.ext3 /dev/TSM1/tsm1db3

mkfs.ext3 /dev/TSM1/tsm1db4

mkfs.ext3 /dev/TSM1/tsm1act

mkfs.ext3 /dev/TSM1/tsm1arc

mkfs.ext3 /dev/TSM1/tsm1cfg

mkfs.ext3 /dev/TSM2/tsm2db1

mkfs.ext3 /dev/TSM2/tsm2db2

mkfs.ext3 /dev/TSM2/tsm2db3

mkfs.ext3 /dev/TSM2/tsm2db4

mkfs.ext3 /dev/TSM2/tsm2act

mkfs.ext3 /dev/TSM2/tsm2arc

mkfs.ext3 /dev/TSM2/tsm2cfg

tune2fs -c 0 -i 0 /dev/TSM1/tsm1db1

tune2fs -c 0 -i 0 /dev/TSM1/tsm1db2

tune2fs -c 0 -i 0 /dev/TSM1/tsm1db3

tune2fs -c 0 -i 0 /dev/TSM1/tsm1db4

tune2fs -c 0 -i 0 /dev/TSM1/tsm1act

tune2fs -c 0 -i 0 /dev/TSM1/tsm1arc

tune2fs -c 0 -i 0 /dev/TSM1/tsm1cfg

tune2fs -c 0 -i 0 /dev/TSM2/tsm2db1

tune2fs -c 0 -i 0 /dev/TSM2/tsm2db2

tune2fs -c 0 -i 0 /dev/TSM2/tsm2db3

tune2fs -c 0 -i 0 /dev/TSM2/tsm2db4

tune2fs -c 0 -i 0 /dev/TSM2/tsm2act

tune2fs -c 0 -i 0 /dev/TSM2/tsm2arc

tune2fs -c 0 -i 0 /dev/TSM2/tsm2cfg

mkdir -p /tsm/TSM1/db1

mkdir -p /tsm/TSM1/db2

mkdir -p /tsm/TSM1/db3

mkdir -p /tsm/TSM1/db4

mkdir -p /tsm/TSM1/act

mkdir -p /tsm/TSM1/arc

mkdir -p /tsm/TSM1/cfg

mkdir -p /tsm/TSM2/db1

mkdir -p /tsm/TSM2/db2

mkdir -p /tsm/TSM2/db3

mkdir -p /tsm/TSM2/db4

mkdir -p /tsm/TSM2/act

mkdir -p /tsm/TSM2/arc

mkdir -p /tsm/TSM2/cfg

mount /dev/TSM1/tsm1db1 /tsm/TSM1/db1

mount /dev/TSM1/tsm1db2 /tsm/TSM1/db2

mount /dev/TSM1/tsm1db3 /tsm/TSM1/db3

mount /dev/TSM1/tsm1db4 /tsm/TSM1/db4

mount /dev/TSM1/tsm1arc /tsm/TSM1/arc

mount /dev/TSM1/tsm1act /tsm/TSM1/act

mount /dev/TSM1/tsm1cfg /tsm/TSM1/cfg

mount /dev/TSM2/tsm2db1 /tsm/TSM2/db1

mount /dev/TSM2/tsm2db2 /tsm/TSM2/db2

mount /dev/TSM2/tsm2db3 /tsm/TSM2/db3

mount /dev/TSM2/tsm2db4 /tsm/TSM2/db4

mount /dev/TSM2/tsm2arc /tsm/TSM2/arc

mount /dev/TSM2/tsm2act /tsm/TSM2/act

mount /dev/TSM2/tsm2cfg /tsm/TSM2/cfg

cat /etc/fstab

/dev/mapper/TSM1-tsm1db1 /tsm/TSM1/db1 ext3 defaults 0 0

/dev/mapper/TSM1-tsm1db2 /tsm/TSM1/db2 ext3 defaults 0 0

/dev/mapper/TSM1-tsm1db3 /tsm/TSM1/db3 ext3 defaults 0 0

/dev/mapper/TSM1-tsm1db4 /tsm/TSM1/db4 ext3 defaults 0 0

/dev/mapper/TSM1-tsm1arc /tsm/TSM1/arc ext3 defaults 0 0

/dev/mapper/TSM1-tsm1act /tsm/TSM1/act ext3 defaults 0 0

/dev/mapper/TSM1-tsm1cfg /tsm/TSM1/cfg ext3 defaults 0 0

/dev/mapper/TSM2-tsm2db1 /tsm/TSM2/db1 ext3 defaults 0 0

/dev/mapper/TSM2-tsm2db2 /tsm/TSM2/db2 ext3 defaults 0 0

/dev/mapper/TSM2-tsm2db3 /tsm/TSM2/db3 ext3 defaults 0 0

/dev/mapper/TSM2-tsm2db4 /tsm/TSM2/db4 ext3 defaults 0 0

/dev/mapper/TSM2-tsm2arc /tsm/TSM2/arc ext3 defaults 0 0

/dev/mapper/TSM2-tsm2act /tsm/TSM2/act ext3 defaults 0 0

/dev/mapper/TSM2-tsm2cfg /tsm/TSM2/cfg ext3 defaults 0 0
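Because the fstab entries follow the same naming pattern for every instance, they can be generated instead of typed. This is a sketch that assumes, as in this example, that the volume group name is also used as the directory below /tsm. fstab_lines is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: print the fstab entries for one ISP instance,
# using the device-mapper names and mount points of this example.
# $1 = volume group (e.g. TSM1), $2 = LV name prefix (e.g. tsm1)
fstab_lines() {
  local vg=$1 prefix=$2 fs
  for fs in db1 db2 db3 db4 arc act cfg; do
    echo "/dev/mapper/${vg}-${prefix}${fs} /tsm/${vg}/${fs} ext3 defaults 0 0"
  done
}

# Example: append both instances, then mount everything listed in fstab.
# { fstab_lines TSM1 tsm1; fstab_lines TSM2 tsm2; } >> /etc/fstab
# mount -a && df -h /tsm/TSM1/* /tsm/TSM2/*
```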

Step 3: ISP Installation

Now ISP (TSM) can be installed. Only the main steps are covered here; consult the ISP documentation for a detailed description of the installation. On both Linux hosts, create the instance users and groups. The user names match the instance names (in lowercase), and the user and group IDs are chosen to match the instances' TCP ports:

groupadd -g 1601 tsm1

useradd -d /tsm/TSM1/cfg -g tsm1 -s /bin/bash -u 1601 tsm1

groupadd -g 1602 tsm2

useradd -d /tsm/TSM2/cfg -g tsm2 -s /bin/bash -u 1602 tsm2

Raise the open-file limit for these users in the Linux limits configuration file (on RHEL: /etc/security/limits.d/90-nproc.conf):

grep tsm /etc/security/limits.conf

tsm1 hard nofile 65536

tsm2 hard nofile 65536
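To double-check the limits entries, the following sketch prints the configured hard nofile value for a user. nofile_hard_limit is a hypothetical helper. It only parses the simple four-column format shown above and does not evaluate group (@group) or wildcard entries.

```shell
#!/bin/bash
# Hypothetical helper: print the hard "nofile" limit configured for USER
# in a limits.conf-style FILE (nothing if no matching entry exists;
# the last matching entry is printed if there are several).
nofile_hard_limit() {
  local file=$1 user=$2
  # Fields: <domain> <type> <item> <value>; comment lines start with '#'
  awk -v u="$user" '$1 == u && $2 == "hard" && $3 == "nofile" { v = $4 }
                    END { if (v != "") print v }' "$file"
}

# Example: check both instance users, then confirm in a fresh login shell.
# for u in tsm1 tsm2; do echo "$u: $(nofile_hard_limit /etc/security/limits.conf "$u")"; done
# su - tsm1 -c 'ulimit -Hn'    # should print 65536 if pam_limits is active
```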

Then change the ownership of the previously created filesystems:

cd /tsm

chown -R tsm1:tsm1 TSM1

chown -R tsm2:tsm2 TSM2

Now the latest ISP client will be installed:

rpm -ivh ./gskcrypt64-8.0.14.43.linux.x86_64.rpm

rpm -ivh ./gskssl64-8.0.14.43.linux.x86_64.rpm

rpm -ivh ./TIVsm-API64.x86_64.rpm

rpm -ivh ./TIVsm-BA.x86_64.rpm

In this case, the ISP server is installed silently using a previously created response file:

./install.sh -s -input TSMresponse.xml -acceptLicense

For the ISP database backup the following lines are added to the dsm.sys ISP client configuration file:

* TSM DB2

SERVERNAME TSMDBMGR_TSM1

TCPPORT 1601

TCPSERVERADDRESS 127.0.0.1

NODENAME $$_TSMDBMGR_$$

PASSWORDDIR /tsm/TSM1/cfg

ERRORLOGNAME /tsm/TSM1/cfg/tsmdbmgr.log

SERVERNAME TSMDBMGR_TSM2

TCPPORT 1602

TCPSERVERADDRESS 127.0.0.1

* PASSWORDACCESS generate

NODENAME $$_TSMDBMGR_$$

PASSWORDDIR /tsm/TSM2/cfg

ERRORLOGNAME /tsm/TSM2/cfg/tsmdbmgr.log

Be aware that as of ISP/TSM 7 it is no longer necessary to store a password. Furthermore, the API configuration resides in a dedicated path; in this example, a link to the standard dsm.sys file is created there:

cd /opt/tivoli/tsm/server/bin/dbbkapi

ln -s /opt/tivoli/tsm/client/ba/bin/dsm.sys .

Step 4: ISP First Steps

In this step, the DB2 database instances are created on both hosts. Most steps must be performed for both the primary and the standby DB2 instance. Only the steps after the initial format, in which ISP is started in the foreground to register users, are needed for the primary instance alone:

/opt/tivoli/tsm/db2/instance/db2icrt -a server -s ese -u tsm1 tsm1

/opt/tivoli/tsm/db2/instance/db2icrt -a server -s ese -u tsm2 tsm2

The users’ profiles should look like this:

su - tsm1

cat .profile

export LANG=en_US

export LC_ALL=en_US

if [ -f /tsm/TSM1/cfg/sqllib/db2profile ]; then

. /tsm/TSM1/cfg/sqllib/db2profile

fi

The DB2 user profile (/tsm/TSM1/cfg/sqllib/userprofile) contains the following:

LD_LIBRARY_PATH=/opt/tivoli/tsm/server/bin/dbbkapi:/usr/local/ibm/gsk8_64/lib64:$LD_LIBRARY_PATH

export LD_LIBRARY_PATH

DSMI_CONFIG=/tsm/TSM1/cfg/tsmdbmgr.opt

DSMI_DIR=/opt/tivoli/tsm/server/bin/dbbkapi

DSMI_LOG=/tsm/TSM1/cfg

export DSMI_CONFIG DSMI_DIR DSMI_LOG

Before the ISP instance is formatted, these preparation tasks are performed. Adjust the dsmserv.opt file to the customer's requirements:

lxnode1:/tsm/TSM1/cfg # ln -s /opt/tivoli/tsm/server/bin/dsmserv dsmserv_TSM1

lxnode1:/tsm/TSM1/cfg # cat tsmdbmgr.opt

SERVERNAME TSMDBMGR_TSM1

lxnode1:/tsm/TSM1/cfg # cat dsmserv.opt

*

* TSM1 - dsmserv.opt

*

COMMmethod TCPIP

TCPPORT 1601

* SSLTCPPORT 1611

TCPADMINPORT 1621

SSLTCPADMINPORT 1631

ADMINONCLIENTPORT NO

TCPWINDOWSIZE 128

TCPBUFSIZE 64

TCPNODELAY YES

DIRECTIO NO

MAXSessions 200

COMMTimeout 3600

IDLETIMEOUT 240

RESOURCETIMEOUT 60

EXPInterval 0

EXPQuiet yes

TXNGroupmax 8192

MOVEBATCHSIZE 1000

MOVESizethresh 32768

DNSLOOKUP YES

LANGUAGE en_US

SEARCHMPQUEUE

REPORTRETRIEVE YES

ADMINCOMMTIMEOUT 3600

ADMINIDLETIMEOUT 600

AliasHalt stoptsm

SANDISCOVERY OFF

SANDISCOVERYTIMEOUT 30

* REORGBEGINTIME 14:30

* REORGDURATION 4

ALLOWREORGINDEX YES

ALLOWREORGTABLE YES

* DISABLEREORGTable

* DISABLEREORGIndex BF_AGGREGATED_BITFILES,BF_BITFILE_EXTENTS,BACKUP_OBJECTS,ARCHIVE_OBJECTS

* DISABLEREORGCleanupindex

* KEEPALIVE YES

DBDIAGLOGSIZE 128

BACKUPINITIATIONROOT OFF

VOLUMEHistory /tsm/TSM1/cfg/volhist.out

DEVCONFig /tsm/TSM1/cfg/devconf.out
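The TCPPORT values must stay consistent between dsmserv.opt, the dsm.sys stanzas and, in this example, the user and group IDs. A small lookup helps to cross-check them. This is a sketch: opt_value is a hypothetical helper that assumes one `NAME value` pair per line and `*` comment lines, as above. It compares option names case-insensitively but does not resolve abbreviated option names.

```shell
#!/bin/bash
# Hypothetical helper: print the value of OPTION from a dsmserv.opt or
# dsm.sys style FILE. Names are matched case-insensitively; abbreviated
# option names are not resolved. Lines starting with '*' are comments.
opt_value() {
  local file=$1 opt=$2
  awk -v o="$opt" '
    $1 ~ /^\*/ { next }                       # skip comment lines
    toupper($1) == toupper(o) { print $2 }    # print every match
  ' "$file"
}

# Example cross-check for TSM1 (expects 1601 in both places and as the UID):
# opt_value /tsm/TSM1/cfg/dsmserv.opt TCPPORT
# opt_value /opt/tivoli/tsm/client/ba/bin/dsm.sys TCPPORT   # one line per stanza
# id -u tsm1
```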

As the ISP instance user, set these parameters:

su - tsm1

db2start

db2 update dbm cfg using dftdbpath /tsm/TSM1/cfg

db2set -i tsm1 DB2CODEPAGE=819

Finally, format the ISP instance. This step is also required on the planned standby instance of this ISP server:

su - tsm1

./dsmserv_TSM1 format dbdir=/tsm/TSM1/db1,/tsm/TSM1/db2,/tsm/TSM1/db3,/tsm/TSM1/db4 activelogsize=102400 activelogdir=/tsm/TSM1/act archlogdir=/tsm/TSM1/arc

Now the first ISP configuration steps are performed. This step is only needed for the primary database. The instance is started in the foreground, and the GSCC administrator (gscc) is registered here as well:

./dsmserv_TSM1

set servername tsm1

set serverpassword tsm1

reg lic file=/opt/tivoli/tsm/server/bin/tsmee.lic

reg admin admin admin passexp=0

grant auth admin class=system

reg admin gscc gscc passexp=0

grant auth gscc class=system

DEFINE DEVCLASS TSMDBB devt=file maxcap=10g dir=/tsm/TSM1/ISI2/sf/tsmdbb

set dbrecovery TSMDBB

stoptsm

After the ISP instance has been stopped, it can be restarted in the background. Before continuing with the next step, verify that a database backup works as expected.

/tsm/TSM1/cfg/dsmserv_TSM1 -i ${lockpath} -quiet >/dev/null 2>&1 &

Step 5: HADR Configuration

The TCP ports used for HADR are added to the /etc/services file:

echo DB2_tsm1_HADR 60100/tcp >> /etc/services

echo DB2_tsm2_HADR 60200/tcp >> /etc/services
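Running the echo commands twice creates duplicate entries in /etc/services. A minimal idempotent variant checks for the service name first. add_service is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: add "NAME PORT/tcp" to a services FILE unless an
# entry with that service name already exists (safe to run repeatedly).
add_service() {
  local name=$1 port=$2 file=${3:-/etc/services}
  # awk exits 0 if the name is found in column 1, otherwise non-zero
  if awk -v n="$name" '$1 == n { found = 1 } END { exit !found }' "$file"; then
    echo "$name already defined in $file"
  else
    echo "$name $port/tcp" >> "$file"
  fi
}

# Run as root on both nodes:
# add_service DB2_tsm1_HADR 60100
# add_service DB2_tsm2_HADR 60200
```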

The next task is to configure HADR on all databases. This is an example for TSM1 including both cluster sides.

On the primary side, the initial DB2 database backup is taken before the settings are changed. After the configuration, a database restart is required to activate the settings:

db2 connect to tsmdb1

db2 GRANT USAGE ON WORKLOAD SYSDEFAULTUSERWORKLOAD TO PUBLIC

db2 backup database TSMDB1 online to /tsm/TSM1/ISILON01/sf/db2backup COMPRESS include logs

db2 update db cfg for TSMDB1 using LOGINDEXBUILD yes

db2 update db cfg for TSMDB1 using HADR_LOCAL_HOST lxnode1

db2 update db cfg for TSMDB1 using HADR_LOCAL_SVC 60100

db2 update db cfg for TSMDB1 using HADR_REMOTE_HOST lxnode2

db2 update db cfg for TSMDB1 using HADR_REMOTE_SVC 60100

db2 update db cfg for TSMDB1 using HADR_REMOTE_INST tsm1

db2 update db cfg for TSMDB1 using HADR_SYNCMODE NEARSYNC

db2 update db cfg for TSMDB1 using HADR_PEER_WINDOW 300

The configuration is also needed on the standby database. First, restore the DB2 backup there to synchronize the databases:

db2 restore db TSMDB1 from /tsm/TSM1/ISILON01/sf/db2backup on /tsm/TSM1/db1,/tsm/TSM1/db2,/tsm/TSM1/db3,/tsm/TSM1/db4 into TSMDB1 logtarget /tsm/TSM1/arc replace history file

When the restore has been successful, we can set HADR and finally activate the database:

db2start

db2 update db cfg for TSMDB1 using LOGINDEXBUILD yes

db2 update db cfg for TSMDB1 using HADR_LOCAL_HOST 53.51.130.153

db2 update db cfg for TSMDB1 using HADR_LOCAL_SVC 60100

db2 update db cfg for TSMDB1 using HADR_REMOTE_HOST 53.51.130.151

db2 update db cfg for TSMDB1 using HADR_REMOTE_SVC 60100

db2 update db cfg for TSMDB1 using HADR_REMOTE_INST tsm1

db2 update db cfg for TSMDB1 using HADR_SYNCMODE NEARSYNC

db2 update db cfg for TSMDB1 using HADR_PEER_WINDOW 300

db2start

db2 start hadr on db tsmdb1 as standby
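The primary and standby settings above differ only in the local and remote host values. As a sketch, you can generate the commands with one hypothetical helper (hadr_cfg_commands) and review them before running them as the instance user. The fixed values (LOGINDEXBUILD, NEARSYNC, peer window 300) are those of this example.

```shell
#!/bin/bash
# Hypothetical helper: print the HADR "db2 update db cfg" commands for one
# side of the pair. Run the output as the instance user (e.g. tsm1).
# $1 = database, $2 = local host, $3 = remote host, $4 = HADR port,
# $5 = remote instance name
hadr_cfg_commands() {
  local db=$1 local_host=$2 remote_host=$3 port=$4 inst=$5
  local setting
  for setting in \
      "LOGINDEXBUILD yes" \
      "HADR_LOCAL_HOST $local_host" \
      "HADR_LOCAL_SVC $port" \
      "HADR_REMOTE_HOST $remote_host" \
      "HADR_REMOTE_SVC $port" \
      "HADR_REMOTE_INST $inst" \
      "HADR_SYNCMODE NEARSYNC" \
      "HADR_PEER_WINDOW 300"; do
    echo "db2 update db cfg for $db using $setting"
  done
}

# Primary (lxnode1) and standby (lxnode2) only swap the host arguments:
# hadr_cfg_commands TSMDB1 lxnode1 lxnode2 60100 tsm1
# hadr_cfg_commands TSMDB1 lxnode2 lxnode1 60100 tsm1
```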

Then HADR is activated on the primary side as well. This is done while ISP is running:

db2 start hadr on db tsmdb1 as primary

db2pd -hadr -db tsmdb1

Database Member 0 -- Database TSMDB1 -- Active -- Up 1 days 01:01:40 -- Date 2015-03-23-01.13.43.718655

HADR_ROLE = PRIMARY

REPLAY_TYPE = PHYSICAL

HADR_SYNCMODE = NEARSYNC

STANDBY_ID = 1

LOG_STREAM_ID = 0

HADR_STATE = PEER

HADR_FLAGS =

PRIMARY_MEMBER_HOST = lxnode1

PRIMARY_INSTANCE = tsm1

PRIMARY_MEMBER = 0

STANDBY_MEMBER_HOST = lxnode2

STANDBY_INSTANCE = tsm1

STANDBY_MEMBER = 0

HADR_CONNECT_STATUS = CONNECTED

HADR_CONNECT_STATUS_TIME = 03/22/2015 00:32:04.317345 (1426980724)

HEARTBEAT_INTERVAL(seconds) = 30

HADR_TIMEOUT(seconds) = 120

TIME_SINCE_LAST_RECV(seconds) = 3

PEER_WAIT_LIMIT(seconds) = 0

LOG_HADR_WAIT_CUR(seconds) = 0.000

LOG_HADR_WAIT_RECENT_AVG(seconds) = 0.001156

LOG_HADR_WAIT_ACCUMULATED(seconds) = 333.423

LOG_HADR_WAIT_COUNT = 222865

SOCK_SEND_BUF_REQUESTED,ACTUAL(bytes) = 0, 16384

SOCK_RECV_BUF_REQUESTED,ACTUAL(bytes) = 0, 87380

PRIMARY_LOG_FILE,PAGE,POS = S0000041.LOG, 28504, 22057976497

STANDBY_LOG_FILE,PAGE,POS = S0000041.LOG, 28504, 22057976497

HADR_LOG_GAP(bytes) = 0

STANDBY_REPLAY_LOG_FILE,PAGE,POS = S0000041.LOG, 28504, 22057976497

STANDBY_RECV_REPLAY_GAP(bytes) = 0

PRIMARY_LOG_TIME = 03/23/2015 01:13:39.000000 (1427069619)

STANDBY_LOG_TIME = 03/23/2015 01:13:39.000000 (1427069619)

STANDBY_REPLAY_LOG_TIME = 03/23/2015 01:13:39.000000 (1427069619)

STANDBY_RECV_BUF_SIZE(pages) = 4096

STANDBY_RECV_BUF_PERCENT = 0

STANDBY_SPOOL_LIMIT(pages) = 3145728

STANDBY_SPOOL_PERCENT = 100

PEER_WINDOW(seconds) = 300

PEER_WINDOW_END = 03/23/2015 01:18:39.000000 (1427069919)

READS_ON_STANDBY_ENABLED = N
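For scripted checks, the two most relevant fields in this output are HADR_STATE and HADR_CONNECT_STATUS. The following sketch assumes the `KEY = value` layout shown above. hadr_in_peer is a hypothetical helper, not a DB2 tool.

```shell
#!/bin/bash
# Hypothetical helper: read "db2pd -hadr" output on stdin and succeed only
# if HADR_STATE is PEER and HADR_CONNECT_STATUS is CONNECTED.
hadr_in_peer() {
  awk -F'=' '
    {
      key = $1; val = $2
      gsub(/^[ \t]+/, "", key); gsub(/[ \t]+$/, "", key)   # trim padding
      gsub(/^[ \t]+/, "", val); gsub(/[ \t]+$/, "", val)
    }
    key == "HADR_STATE"          { state = val }
    key == "HADR_CONNECT_STATUS" { conn = val }
    END { exit !(state == "PEER" && conn == "CONNECTED") }
  '
}

# Example, as the instance user:
# db2pd -hadr -db tsmdb1 | hadr_in_peer && echo "HADR OK" || echo "HADR not in PEER state"
```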

The following files should be transferred from the primary configuration directory to the standby side:

cd /tsm/TSM1/cfg

tar -cvf cert_tsm1.tar ./cert*

./cert.arm

./cert.crl

./cert.kdb

./cert.rdb

./cert.sth

./cert256.arm

tar -cvf cfg_tsm1.tar dsmserv.opt NODELOCK dsmkeydb.* volhist.out devconf.out dsmserv.dbid

dsmserv.opt

NODELOCK

dsmkeydb.sth

dsmkeydb.kdb

volhist.out

devconf.out

dsmserv.dbid

scp *.tar lxnode2:/tsm/TSM1/cfg

Password:

cert_tsm1.tar 100% 140KB 140.0KB/s 00:00

cfg_tsm1.tar 100% 90KB 90.0KB/s 00:00

On the other host these files are unpacked:

ssh lxnode2

cd /tsm/TSM1/cfg

tar -xvf cert_tsm1.tar

./cert.arm

./cert.crl

./cert.kdb

./cert.rdb

./cert.sth

./cert256.arm

tar -xvf cfg_tsm1.tar

dsmserv.opt

NODELOCK

dsmkeydb.sth

dsmkeydb.kdb

volhist.out

devconf.out

dsmserv.dbid

Step 6: GSCC Installation

On both Linux hosts the software is installed first:

rpm -ihv gscc-3.8.0.409-0.x86_64.rpm

Set a link for ksh93:

ln -s /bin/ksh /bin/ksh93

ln -s /usr/bin/ksh /bin/ksh93att

The GSCC configuration files are placed in /etc/gscc. They are created in the next steps and are required on both Linux nodes. Be aware that the MEMBER.def files are not identical on the two nodes, since they contain information about the remote node. The GSCC member names are the same as the ISP instance names. A typical configuration directory could look like this:

lax04:/etc/gscc # ls -la

total 84

drwxr-xr-x 4 root root 4096 Mar 22 00:04 .

drwxr-xr-x 109 root root 12288 Mar 19 07:23 ..

-rw------- 1 root root 13 Feb 21 17:04 .gscckey

-rw-r--r-- 1 root root 4005 Feb 18 16:59 README

-rw-r--r-- 1 root root 3671 Mar 21 23:44 TSM1.def

-rw-r--r-- 1 root root 296 Feb 21 16:41 TSM1.tsmlocalfs

-rw-r--r-- 1 root root 3674 Feb 23 12:37 TSM2.def

-rw-r--r-- 1 root root 296 Feb 23 12:38 TSM2.tsmlocalfs

-rw-r--r-- 1 root root 188 Feb 23 12:37 cl.def

-rw-r--r-- 1 root root 1461 Mar 21 23:37 gscc.conf

-rw-r--r-- 1 root root 10 Feb 18 17:00 gscc.version

-rw-r--r-- 1 root root 15 Feb 21 16:05 heartbeat

-rw-r--r-- 1 root root 10 Feb 21 16:03 localhost

-rw-r--r-- 1 root root 143 Mar 8 20:25 logrot.conf

-rw-r--r-- 1 root root 40 Feb 21 16:00 passweb

-rw-r--r-- 1 root root 40 Feb 21 16:00 alertscript

drwxr-xr-x 2 root root 4096 Feb 23 12:59 samples

drwxr-xr-x 2 root root 4096 Feb 21 00:04 ui

-rw-r--r-- 1 root root 215 Feb 21 17:07 web.def

The GSCC members are defined in cl.def. Be aware that the second column holds the ISP/TSM instance name, also referred to as the member team name (it groups together all members that support the same ISP instance):

cat /etc/gscc/cl.def

#

# Cluster Object Definitions

#

# (C) General Storage, 2018

#

#

# <Member_Name> <Member_Team> <Member Description>

#

TSM1 TSM1 Production

TSM2 TSM2 Production

The central configuration file:

cat /etc/gscc/gscc.conf

# Activate Auto register

AutoRegister Yes

#Lifetime 1200

#Loclifetime 600

FileLogLevel Debug

#FileLogLevel Info

#FileLogLevel Notice

#FileLogLevel Warning

#FileLogLevel Error

Environment LC_ALL=C

Environment LANG=C

Environment StatusReadTimeout=300

Environment StatusFailureThreshold=5

Environment RemoteFailureThreshold=2

Environment TSMKillCounter=40

Environment ForceDuration=600

#Environment ForceDuration=0

Environment ErrCountMax=1

#Environment TSMAltPort=0

#Environment TSMAltPort=3940

Environment sshPath=/usr/bin/ssh

Environment hadrCOM=intern

Environment SSLPATH=/usr/bin

If GSCCAD is used, a local filesystem configuration file is required for each GSCC member:

cat /etc/gscc/TSM1.tsmlocalfs

/dev/mapper/TSM1-tsm1cfg /tsm/TSM1/cfg

/dev/mapper/TSM1-tsm1db1 /tsm/TSM1/db1

/dev/mapper/TSM1-tsm1db2 /tsm/TSM1/db2

/dev/mapper/TSM1-tsm1db3 /tsm/TSM1/db3

/dev/mapper/TSM1-tsm1db4 /tsm/TSM1/db4

/dev/mapper/TSM1-tsm1arc /tsm/TSM1/arc

/dev/mapper/TSM1-tsm1act /tsm/TSM1/act
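The tsmlocalfs entries correspond to the member's fstab entries, so the file could be derived from /etc/fstab. This is a sketch. tsmlocalfs_from_fstab is a hypothetical helper. It assumes that all mount points of a member are below /tsm/<member>/, as in this example, and it keeps the fstab order.

```shell
#!/bin/bash
# Hypothetical helper: derive a MEMBER.tsmlocalfs file from fstab by
# printing "<device> <mount point>" for every mount below /tsm/<MEMBER>/.
tsmlocalfs_from_fstab() {
  local member=$1 fstab=${2:-/etc/fstab}
  awk -v prefix="/tsm/${member}/" '
    $1 !~ /^#/ && index($2, prefix) == 1 { print $1, $2 }
  ' "$fstab"
}

# Review the result, then write it:
# tsmlocalfs_from_fstab TSM1 > /etc/gscc/TSM1.tsmlocalfs
```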

Each member is configured with a definition file “MEMBER.def”, which is required on both Linux nodes. In contrast to the previous configuration files, the files on the two hosts are not identical: “peer_ip” points to the remote node, and “member_type” is “prod” on lxnode1 and “stby” on lxnode2. Otherwise, the files should be the same, since in this example the Linux hosts are configured alike. There are two LACP interfaces in this example (bond0, bond1). The service IP is the virtual IP alias, which is not configured on the physical interface; its value is the same on the primary and the standby. The “home_system_id” identifies the preferred host for this member, so it must be the same in both files.

You can get the value by using the “gshostid” tool:

lxnode1:/ #gshostid

lxnode1 33359782

lxnode1:/ #cat /etc/gscc/TSM1.def

#

# (C) General Storage

#

#

# TSM1.def

# this file is needed for each Cluster Member !

# default path: $HOME/.gscc/sample.def

#

# use ":" for any blank in var-values

#

# <var_name> <value> <discription>

expert_domain edUNIX_T6_HADR Expert Domain

am_tsm_server_path /tsm/TSM1/cfg Path to TSM Server

service_interface bond0,bond1 Comma separated TSM Server Network Interface(s)

service_ip_address 192.168.178.111,192.168.177.111 Comma separated TSM Server IP Addresse(s)

service_netmask 255.255.255.0,255.255.255.0 Comma separated TSM Server Netmask(s)

service_broadcast 192.168.178.255,192.168.177.255 Comma separated TSM Server Netmask(s)

log_file /var/gscc/logs/im.tsm1.log Activity Log File

am_log_file /var/gscc/logs/tsm1.log Action method log file

tsm_auth_string dsmadmc:-se=tsm1_local:SECURED TSM Authentication String

peer_tsm_auth_string dsmadmc:-se=tsm1:SECURED TSM Authentication String - remote removed

forces_command - Reset server verification key

home_system_id 33359782 System ID

member_type prod Member Type: prod | stby

suff_pings 1 Number of Clients that have to be reached

client_ip_address_file /etc/gscc/clipup File for Client IPs

heartbeat /etc/gscc/heartbeat Heartbeat IP Address

peer_ip 192.168.178.102 IP Address of Peer TSM Server

peer_port 3939 IP Port of Peer TSM Server

peer_member_name TSM1 Peer Member ID

local_member_name TSM1 Member ID

oserip_suff_pings 1 Pings to test against Shared Router

tsm_check_string dsmadmc:-se=tsm1_local:SECURED:q:status TSM Check String

otsm_check_string dsmadmc:-se=tsm1_remote:SECURED:q:status Other TSM Check String

tsm_startup_tolerance 600 Startup Time in Seconds for dsmserv

errorlistfile /var/gscc/logs/tsm1.errorlist.out Error List File

pagecommand /etc/gscc/alert Command to page operator

pageinterval 600 Time between paging the Operator on error

trigger auto How Operator triggers Failed status (key: auto)

dbb_rules hadr Full/incremental dbb interval and frequency

#dbb_rules extern Full/incremental dbb interval and frequency

tsm_fulldevc tsmdbb TSM device class for full database backups

tsm_incrdevc tsmdbb TSM device clss for incremental database backups

hadr_local_if bond0 HADR

hadr_local_ip 192.168.178.101 HADR

hadr_local_mask 255.255.255.0 HADR

hadr_local_cast 192.168.177.102 HADR

lxnode2:/ #cat /etc/gscc/TSM1.def

#

# (C) General Storage

#

#

# TSM1.def

# this file is needed for each Cluster Member !

# default path: $HOME/.gscc/sample.def

#

# use ":" for any blank in var-values

#

# <var_name> <value> <discription>

expert_domain edUNIX_T6_HADR Expert Domain

am_tsm_server_path /tsm/TSM1/cfg Path to TSM Server

service_interface bond0,bond1 Comma separated TSM Server Network Interface(s)

service_ip_address 192.168.178.111,192.168.177.111 Comma separated TSM Server IP Addresse(s)

service_netmask 255.255.255.0,255.255.255.0 Comma separated TSM Server Netmask(s)

service_broadcast 192.168.178.255,192.168.177.255 Comma separated TSM Server Netmask(s)

log_file /var/gscc/logs/im.tsm1.log Activity Log File

am_log_file /var/gscc/logs/tsm1.log Action method log file

tsm_auth_string dsmadmc:-se=tsm1_local:SECURED TSM Authentication String

peer_tsm_auth_string dsmadmc:-se=tsm1:SECURED TSM Authentication String - remote removed

forces_command - Reset server verification key

home_system_id 33359782 System ID

member_type stby Member Type: prod | stby

suff_pings 1 Number of Clients that have to be reached

client_ip_address_file /etc/gscc/clipup File for Client IPs

heartbeat /etc/gscc/heartbeat Heartbeat IP Address

peer_ip 192.168.178.101 IP Address of Peer TSM Server

peer_port 3939 IP Port of Peer TSM Server

peer_member_name TSM1 Peer Member ID

local_member_name TSM1 Member ID

oserip_suff_pings 1 Pings to test against Shared Router

tsm_check_string dsmadmc:-se=tsm1_local:SECURED:q:status TSM Check String

otsm_check_string dsmadmc:-se=tsm1_remote:SECURED:q:status Other TSM Check String

tsm_startup_tolerance 600 Startup Time in Seconds for dsmserv

errorlistfile /var/gscc/logs/tsm1.errorlist.out Error List File

pagecommand /etc/gscc/alert Command to page operator

pageinterval 600 Time between paging the Operator on error

trigger auto How Operator triggers Failed status (key: auto)

dbb_rules hadr Full/incremental dbb interval and frequency

#dbb_rules extern Full/incremental dbb interval and frequency

tsm_fulldevc tsmdbb TSM device class for full database backups

tsm_incrdevc tsmdbb TSM device clss for incremental database backups

hadr_local_if bond0 HADR

hadr_local_ip 192.168.178.101 HADR

hadr_local_mask 255.255.255.0 HADR

hadr_local_cast 192.168.177.102 HADR
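The two MEMBER.def files should differ only in a few values, so comparing them by variable name is a quick sanity check. The following awk-based sketch ignores comments and the description column. def_diff is a hypothetical name. For the two TSM1.def files above, it should report only member_type and peer_ip.

```shell
#!/bin/bash
# Hypothetical helper: compare two MEMBER.def files by variable name and
# print every variable whose value differs ("name: value_a | value_b").
# Comment lines (starting with '#') are ignored; the description column
# is not compared. Output order is unspecified.
def_diff() {
  awk '
    $1 ~ /^#/ || NF < 2 { next }
    FNR == NR { a[$1] = $2; next }          # first file
    { b[$1] = $2 }                          # second file
    END {
      for (k in a) if (!(k in b) || a[k] != b[k]) print k ": " a[k] " | " b[k]
      for (k in b) if (!(k in a)) print k ": " " | " b[k]
    }
  ' "$1" "$2"
}

# Example: copy the standby file over and compare on lxnode1.
# scp lxnode2:/etc/gscc/TSM1.def /tmp/TSM1.def.lxnode2
# def_diff /etc/gscc/TSM1.def /tmp/TSM1.def.lxnode2
```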

The heartbeat file contains the remote IP addresses:

cat /etc/gscc/heartbeat

192.168.178.102

192.168.177.102

192.168.176.102

The clipup file usually contains the heartbeat IP addresses as well, plus one additional address of a router, gateway or other reliably available host, which serves as the quorum tiebreaker:

cat /etc/gscc/clipup

192.168.178.102

192.168.177.102

192.168.176.102

192.168.178.1

The log rotation can also be configured now. The logrot job can be added to root's crontab now or later; alternatively, create a file in /etc/cron.d, where each entry needs an additional user field. Use only one of the two methods, otherwise logrot runs twice:

cat /etc/gscc/logrot.conf

/var/gscc/logs/gscc.log 1000000 5

/var/gscc/logs/im.tsm1.log 200000 5

/var/gscc/logs/TSM1.sync 200000 5

/var/gscc/logs/im.tsm2.log 200000 5

/var/gscc/logs/TSM2.sync 200000 5

crontab -l | grep logrot

0 16 * * * /opt/generalstorage/gscc/bin/logrot >/var/gscc/logs/logrot.out 2>&1

echo '0 16 * * * root /opt/generalstorage/gscc/bin/logrot >/var/gscc/logs/logrot.out 2>&1' > /etc/cron.d/gscc_logrot.cron

Now add the GSCC binaries to the PATH by creating /etc/profile.d/gscc.sh with the following line, then source it to update the PATH of the current shell:

cat /etc/profile.d/gscc.sh

PATH=${PATH}:/opt/generalstorage/gscc/bin

. /etc/profile.d/gscc.sh

GSCC should also be registered as a system service. This example uses SLES 11 and therefore System V init; for SLES 12 or RHEL 7, see the systemd configuration below:

cd /etc/init.d

ln -s /opt/generalstorage/gscc/bin/rc.gscc gscc

chkconfig gscc 35

chkconfig --list gscc

gscc 0:off 1:off 2:off 3:on 4:off 5:on 6:off

Usage:

service gscc {start|stop|restart|status}

If you use GSCCAD with SSL access, this additional service is required:

ln -s /opt/generalstorage/gscc/bin/rc.gsuid gsuid

chkconfig gsuid 35

chkconfig --list gsuid

gsuid 0:off 1:off 2:off 3:on 4:off 5:on 6:off

In case SLES12 / RHEL 7 is installed, the following steps are required instead:

cp /opt/generalstorage/gscc/bin/gscc.service /etc/systemd/system

systemctl enable gscc.service

cp /opt/generalstorage/gscc/bin/gsuid.service /etc/systemd/system

systemctl enable gsuid.service

Set “Operator” as the start status for all members:

lxnode1:/ # setoper

INFO: Member TSM1 set to Operator at GSCC startup

INFO: Member TSM2 set to Operator at GSCC startup

The next step is to save the ISP server administrator password for GSCC:

lxnode1:/ # settsmadm

General Storage Cluster Controller for TSM - Version 3.8.0.1

TSM Admin Registration – SECURED

Please enter TSM user id: gscc

Please enter TSM password:

Please enter GSCC key:

Please enter GSCC key again:

INFO: key verification OK.

INFO: Encrypting and saving input.

INFO: Completed.

GSCC can be started now. This example is again for SLES 11; see below for SLES 12 and RHEL 7:

lxnode1:/etc/gscc # service gscc start

Starting gscc daemons: done

If GSCCAD is used with SSL, the gsuid daemon needs to be started as well:

lxnode1:/etc/gscc # service gsuid start

Starting gsuid daemons: done

On SLES 12 and RHEL 7, the services are started like this:

lxnode1:/etc/gscc # systemctl start gscc

lxnode1:/etc/gscc # systemctl start gsuid

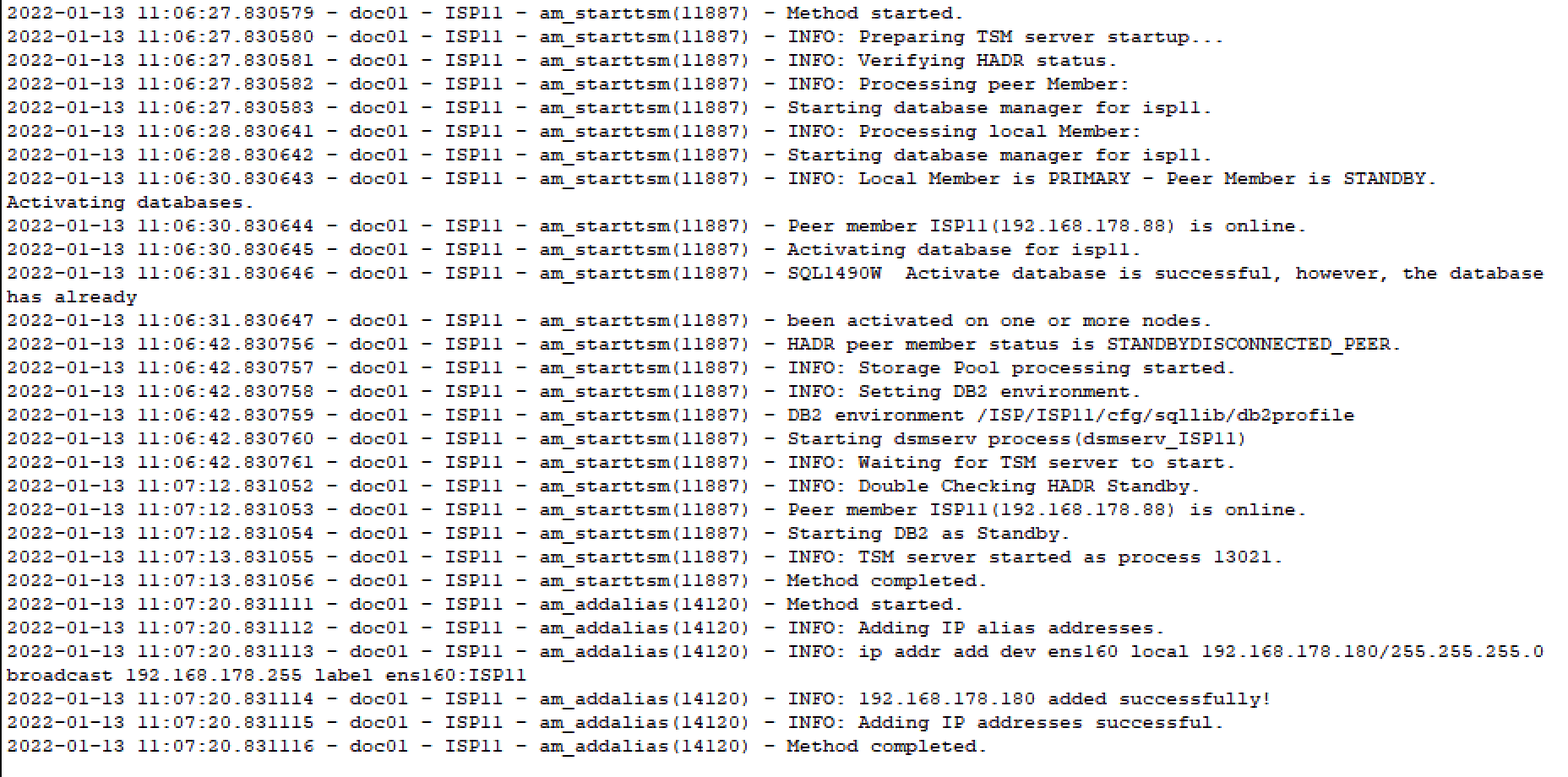

You can check the startup status in /var/gscc/logs/gscc.log. If there are no obvious errors, verify GSCC by stopping and starting the TSM instances in “gsccadm”. The output could look like this:

gsccadm

General Storage Cluster Controller for TSM - Version 3.8.0.409

Command Line Administrative Interface

(C) Copyright General Storage 2015

Please enter user id: admin

Please enter password:

GSCC CLI-Version: 1988

gscc lxnode1>q status

150323080635 TSM1 Operator

150323080635 TSM2 Operator

gscc lxnode1>q tsm

TSM1 running_p_peer

TSM2 db2sysc_s_peer

gscc lxnode1>lxnode2:q tsm

lxnode2 returns:

TSM1 db2sysc_s_peer

TSM2 running_p_peer

SPORD & GSCC - IP Cluster

In this example, we install SPORD and GSCC in the following environment:

- Two Linux systems with SLES 12 SP5: sles-01 (192.168.178.67) and sles-02 (192.168.178.68)
- A single ISP instance will be installed: ISP01

For each installation step, the headline will either say “Primary”, “Standby” or “Both”, meaning that those steps should be done either on the designated primary cluster system, the standby system, or both of them. In our case, sles-01 is the primary system and sles-02 is the standby system.

Step 1: Installing Prerequisites (Both)

Make sure that the systems are up to date, then install docker:

sles-02:~ # zypper in docker

Refreshing service 'Containers_Module_12_x86_64'.

Refreshing service 'SUSE_Linux_Enterprise_Server_12_SP5_x86_64'.

Refreshing service 'SUSE_Package_Hub_12_SP5_x86_64'.

Retrieving repository 'SLES12-SP5-Updates' metadata ...[done]

Building repository 'SLES12-SP5-Updates' cache ...[done]

Loading repository data...

Reading installed packages...

Resolving package dependencies...

The following 8 NEW packages are going to be installed:

apparmor-parser catatonit containerd docker git-core libpcre2-8-0 perl-Error runc

The following recommended package was automatically selected:

git-core

8 new packages to install.

Overall download size: 50.8 MiB. Already cached: 0 B. After the operation, additional 250.8 MiB will be used.

Continue? [y/n/...? shows all options] (y): y

Retrieving package catatonit-0.1.3-1.3.1.x86_64 (1/8), 270.2 KiB (781.5 KiB unpacked)

Retrieving: catatonit-0.1.3-1.3.1.x86_64.rpm ...[done]

Retrieving package runc-1.0.2-16.14.1.x86_64 (2/8), 3.5 MiB ( 15.2 MiB unpacked)

Retrieving: runc-1.0.2-16.14.1.x86_64.rpm ...[done]

Retrieving package containerd-1.4.11-16.45.1.x86_64 (3/8), 14.4 MiB ( 69.7 MiB unpacked)

Retrieving: containerd-1.4.11-16.45.1.x86_64.rpm ...[done]

Retrieving package perl-Error-0.17021-1.18.noarch (4/8), 28.3 KiB ( 49.8 KiB unpacked)

Retrieving: perl-Error-0.17021-1.18.noarch.rpm ...[done]

Retrieving package apparmor-parser-2.8.2-51.27.1.x86_64 (5/8), 296.4 KiB (836.6 KiB unpacked)

Retrieving: apparmor-parser-2.8.2-51.27.1.x86_64.rpm ................................................................................................................................................................................................................................[done]

Retrieving package libpcre2-8-0-10.34-1.3.1.x86_64 (6/8), 328.1 KiB (865.3 KiB unpacked)

Retrieving: libpcre2-8-0-10.34-1.3.1.x86_64.rpm .....................................................................................................................................................................................................................................[done]

Retrieving package git-core-2.26.2-27.49.3.x86_64 (7/8), 4.8 MiB ( 29.4 MiB unpacked)

Retrieving: git-core-2.26.2-27.49.3.x86_64.rpm ......................................................................................................................................................................................................................................[done]

Retrieving package docker-20.10.9_ce-98.72.1.x86_64 (8/8), 27.2 MiB (134.0 MiB unpacked)

Retrieving: docker-20.10.9_ce-98.72.1.x86_64.rpm ........................................................................................................................................................................................................................[done (3.7 MiB/s)]

Checking for file conflicts: ........................................................................................................................................................................................................................................................[done]

(1/8) Installing: catatonit-0.1.3-1.3.1.x86_64 ......................................................................................................................................................................................................................................[done]

(2/8) Installing: runc-1.0.2-16.14.1.x86_64 .........................................................................................................................................................................................................................................[done]

(3/8) Installing: containerd-1.4.11-16.45.1.x86_64 ..................................................................................................................................................................................................................................[done]

(4/8) Installing: perl-Error-0.17021-1.18.noarch ....................................................................................................................................................................................................................................[done]

(5/8) Installing: apparmor-parser-2.8.2-51.27.1.x86_64 ..............................................................................................................................................................................................................................[done]

Additional rpm output:

Created symlink from /etc/systemd/system/multi-user.target.wants/apparmor.service to /usr/lib/systemd/system/apparmor.service.

(6/8) Installing: libpcre2-8-0-10.34-1.3.1.x86_64 ...................................................................................................................................................................................................................................[done]

(7/8) Installing: git-core-2.26.2-27.49.3.x86_64 ....................................................................................................................................................................................................................................[done]

(8/8) Installing: docker-20.10.9_ce-98.72.1.x86_64 ..................................................................................................................................................................................................................................[done]

Additional rpm output:

Updating /etc/sysconfig/docker…

Caution

In the next step, we start the Docker daemon with its default settings. By default, Docker creates a bridge network, which in some cases can interfere with the host's network connection. If unsure, you have two options. You can configure the default bridge network with values that do not conflict with your environment. Alternatively, you can disable it as described in Disabling Docker default bridge network.
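One way to move the default bridge to a non-conflicting subnet is the "bip" key in Docker's daemon.json. The following is a sketch under these assumptions:

- 10.254.0.1/24 is unused in your network.
- No daemon.json exists yet.

The function name write_daemon_json is hypothetical. It refuses to overwrite an existing file, so that other daemon settings are not lost.

```shell
# Hypothetical helper: write a minimal daemon.json that sets the docker0
# bridge address ("bip") to a subnet you know is free.
write_daemon_json() {
    target="${1:-/etc/docker/daemon.json}"
    bip="${2:-10.254.0.1/24}"   # assumed-free gateway address/prefix for docker0
    if [ -e "$target" ]; then
        echo "$target already exists; merge the \"bip\" key manually" >&2
        return 1
    fi
    mkdir -p "$(dirname "$target")"
    printf '{\n  "bip": "%s"\n}\n' "$bip" > "$target"
}

# Example (before the first start of the Docker daemon):
#   write_daemon_json /etc/docker/daemon.json 10.254.0.1/24
```

If the daemon is already running, it must be restarted (systemctl restart docker) before the new bridge address takes effect.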

Enable the Docker daemon at boot and start it immediately:

sles-02:~ # systemctl enable docker --now

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

sles-02:~ # systemctl status docker

* docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2021-11-25 09:37:34 CET; 15s ago

Docs: http://docs.docker.com

Main PID: 25784 (dockerd)

Tasks: 16

Memory: 56.9M

CPU: 318ms

CGroup: /system.slice/docker.service

├─25784 /usr/bin/dockerd --add-runtime oci=/usr/sbin/docker-runc

└─25790 containerd --config /var/run/docker/containerd/containerd.toml --log-level warn

Nov 25 09:37:34 sles-02 systemd[1]: Starting Docker Application Container Engine...

Nov 25 09:37:34 sles-02 dockerd[25784]: time="2021-11-25T09:37:34+01:00" level=info msg="SUSE:secrets :: enabled"

Nov 25 09:37:34 sles-02 dockerd[25784]: time="2021-11-25T09:37:34.126474149+01:00" level=warning msg="failed to load plugin io.containerd.snapshotter.v1.devmapper" error="devma...t configured"

Nov 25 09:37:34 sles-02 dockerd[25784]: time="2021-11-25T09:37:34.127128802+01:00" level=warning msg="could not use snapshotter devmapper in metadata plugin" error="devmapper not configured"

Nov 25 09:37:34 sles-02 dockerd[25784]: time="2021-11-25T09:37:34.182582431+01:00" level=warning msg="Your kernel does not support swap memory limit"

Nov 25 09:37:34 sles-02 systemd[1]: Started Docker Application Container Engine.

Hint: Some lines were ellipsized, use -l to show in full.

Upload the SPORD package and the Docker image archive to both systems:

sles-02:/home/SPORD/ISP01 # ll /home/spordinstall/

total 9846844

-rw-r--r-- 1 root root 2906618639 Nov 24 12:25 SP819.100-GSCC-DSMISI.tar.gz

-rw-r--r-- 1 root root 28116 Nov 24 12:25 spord-3.8.5.4-0.x86_64.rpm

...
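The image archive is several gigabytes in size. It can therefore be worth confirming that both nodes received identical copies before loading it. This optional check is not part of SPORD. It is a sketch assuming GNU coreutils sha256sum, and the helper names make_checksums and verify_checksums are hypothetical.

```shell
# Hypothetical helper: print SHA-256 sums for the given files in a directory.
make_checksums() {
    dir=$1; shift
    ( cd "$dir" && sha256sum -- "$@" )
}

# Hypothetical helper: verify files in a directory against a checksum list
# read from standard input (the output of make_checksums).
verify_checksums() {
    dir=$1
    ( cd "$dir" && sha256sum -c --quiet - )
}

# Example:
#   sles-01: make_checksums /home/spordinstall SP819.100-GSCC-DSMISI.tar.gz \
#              spord-3.8.5.4-0.x86_64.rpm > /tmp/spord.sha256
#   copy /tmp/spord.sha256 to sles-02, then:
#   sles-02: verify_checksums /home/spordinstall < /tmp/spord.sha256
```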

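Install the SPORD package. The package is not signed, so zypper asks how to handle the failed signature check; answer i (ignore) to proceed: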

sles-01:/spordinstall # zypper in spord-3.8.5.4-0.x86_64.rpm

Refreshing service 'Containers_Module_12_x86_64'.

Refreshing service 'SUSE_Linux_Enterprise_Server_12_SP5_x86_64'.

Refreshing service 'SUSE_Package_Hub_12_SP5_x86_64'.

Loading repository data...

Reading installed packages...

Resolving package dependencies...

The following NEW package is going to be installed:

spord

The following package has no support information from it's vendor:

spord

1 new package to install.

Overall download size: 27.5 KiB. Already cached: 0 B. After the operation, additional 81.0 KiB will be used.

Continue? [y/n/...? shows all options] (y): y

Retrieving package spord-3.8.5.4-0.x86_64 (1/1), 27.5 KiB ( 81.0 KiB unpacked)

spord-3.8.5.4-0.x86_64.rpm:

Package is not signed!

spord-3.8.5.4-0.x86_64 (Plain RPM files cache): Signature verification failed [6-File is unsigned]

Abort, retry, ignore? [a/r/i] (a): i

Checking for file conflicts: .............................................................................................................................................................[done]

(1/1) Installing: spord-3.8.5.4-0.x86_64 .................................................................................................................................................[done]


sles-01:/spordinstall # rpm -qa|grep spord

spord-3.8.5.4-0.x86_64

(Optional) Add the SPORD binaries to your user's PATH:

sles-02:/home/SPORD/ISP01 # cd

sles-02:~ # vi .profile

...

sles-02:~ # cat .profile

export PATH=$PATH:/opt/generalstorage/spord/bin
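The plain export above appends the directory again every time .profile is read. A slightly more defensive variant for .profile only adds it when it is missing. This is a sketch, and the function name add_to_path is hypothetical.

```shell
# Hypothetical helper: append a directory to PATH unless it is already present.
SPORD_BIN=/opt/generalstorage/spord/bin
add_to_path() {
    case ":$PATH:" in
        *":$1:"*) ;;                          # already in PATH, nothing to do
        *) PATH="${PATH:+$PATH:}$1" ;;        # append (no leading ":" if PATH was empty)
    esac
    export PATH
}
add_to_path "$SPORD_BIN"
```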

Uncompress the Docker image archive and load the image into the local Docker image store:

sles-02:/home/spordinstall # gzip -dc SP819.100-GSCC-DSMISI.tar.gz | tar -xvf -

SP819.100-GSCC-DSMISI

sles-02:/home/spordinstall # docker load -i SP819.100-GSCC-DSMISI

129b697f70e9: Loading layer [==================================================>] 205.1MB/205.1MB

042993f6cac4: Loading layer [==================================================>] 4.211GB/4.211GB

b8d624f1a74a: Loading layer [==================================================>] 2.735GB/2.735GB

59c5e7c3edeb: Loading layer [==================================================>] 20.55MB/20.55MB

Loaded image: dockercentos74:SP819.100-GSCC-DSMISI

Step 2: Configure SPORD (Both)

Create a SPORD configuration file:

vi /etc/spord/spord.conf:

#######################################################

# General Storage Software GmbH

# SPORD - Spectrum Protect On Resilient Docker

#

# spord.conf

#######################################################

SPORDNAME ISP01

SPORDHOME /home/SPORD/ISP01

DOCKERIMAGE dockercentos74:SP819.100-GSCC-DSMISI

ADDMAPPING /home/stgpools/ISP01,/home/SPORD/ISP01/etcgscc:/etc/gscc,/home/SPORD/ISP01/vargscc:/var/gscc

DKDFILE ISP01.dkd

ADDOPTION --pids-limit 6000
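To review which volumes an ADDMAPPING line will produce, you can expand it into docker "-v" options. The mapping rules below are an assumption inferred from this example, not taken from SPORD's documentation:

- Entries are separated by commas.
- An entry of the form "host:container" is mounted as written.
- A bare path is mounted at the same path inside the container, as in the "-v /home/stgpools/ISP01:/home/stgpools/ISP01" option shown later in the create output.

The helper names addmapping_to_volumes and addmapping_from_conf are hypothetical.

```shell
# Hypothetical helper: turn an ADDMAPPING value into docker -v options (one per line).
addmapping_to_volumes() {
    printf '%s\n' "$1" | tr ',' '\n' | while IFS= read -r entry; do
        [ -n "$entry" ] || continue
        case "$entry" in
            *:*) printf '%s\n' "-v $entry" ;;           # explicit host:container
            *)   printf '%s\n' "-v $entry:$entry" ;;    # same path inside the container
        esac
    done
}

# Hypothetical helper: read the ADDMAPPING value(s) from a spord.conf-style file.
addmapping_from_conf() {
    awk '$1 == "ADDMAPPING" { print $2 }' "$1"
}

# Example:
#   addmapping_to_volumes "$(addmapping_from_conf /etc/spord/spord.conf)"
```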

Create the container configuration file “ISP01.dkd”:

vi /home/SPORD/ISP01/ISP01.dkd:

# General Storage ISP Server Image for Docker Configuration File

user isp01

userid 1601

group isp01

groupid 1601

home /home/SPORD/ISP01/cfg

dbdir /home/SPORD/ISP01/db1,/home/SPORD/ISP01/db2,/home/SPORD/ISP01/db3,/home/SPORD/ISP01/db4

actlog /home/SPORD/ISP01/act

arclog /home/SPORD/ISP01/arc

actlogsize 2048

instdir /home/SPORD/ISP01/cfg

licdir /home/SPORD/ISP01

tcpport 1601

Add the user, group and directories as defined in the configuration files:

sles-01:/home/SPORD/ISP01 # groupadd -g 1601 isp01

sles-01:/home/SPORD/ISP01 # useradd -g 1601 -d /home/isp01 -m -u 1601 isp01

sles-01:/home/SPORD # su - isp01

isp01@sles-01:~> cd /home/SPORD/ISP01/

isp01@sles-01:/home/SPORD/ISP01> mkdir cfg db1 db2 db3 db4 act arc etcgscc vargscc

sles-01:~ # ll /home/SPORD/ISP01/

-rw-r--r-- 1 isp01 isp01 377 Nov 25 10:37 ISP01.dkd

drwxr-xr-x 3 isp01 isp01 22 Nov 25 11:31 act

drwxr-xr-x 3 isp01 isp01 19 Nov 25 11:31 arc

drwxr-xr-x 10 isp01 isp01 4096 Nov 26 16:29 cfg

drwxr-xr-x 3 isp01 isp01 19 Nov 25 11:31 db1

drwxr-xr-x 3 isp01 isp01 19 Nov 25 11:31 db2

-rw-r--r-- 1 root root 891 Nov 26 15:24 db2ese.lic

-rw-r--r-- 1 root root 914 Nov 26 09:49 db2ese.lic.11.1

-rw-r--r-- 1 root root 891 Nov 26 09:49 db2ese.lic.11.5

drwxr-xr-x 3 isp01 isp01 19 Nov 25 11:31 db3

drwxr-xr-x 3 isp01 isp01 19 Nov 25 11:31 db4

-rw-r--r-- 1 root root 14658 Nov 26 11:05 dsmreg.sl

drwxr-xr-x 4 root root 225 Nov 26 15:29 etcgscc

drwxr-xr-x 4 root root 71 Nov 26 15:29 vargscc
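Instead of typing the mkdir list by hand, the directory names can be taken from the .dkd file itself. This sketch assumes the following:

- There is one "key value" pair per line, as in ISP01.dkd above.
- dbdir holds a comma-separated list.

It covers home, dbdir, actlog, arclog and instdir only. The etcgscc and vargscc directories from spord.conf are not included. The function name dkd_dirs is hypothetical.

```shell
# Hypothetical helper: list the directories referenced by a .dkd file, one per line.
dkd_dirs() {
    awk '
        $1 == "dbdir" { n = split($2, d, ","); for (i = 1; i <= n; i++) print d[i]; next }
        $1 == "home" || $1 == "actlog" || $1 == "arclog" || $1 == "instdir" { print $2 }
    ' "$1" | LC_ALL=C sort -u
}

# Example: create the directories owned by the instance user.
#   dkd_dirs /home/SPORD/ISP01/ISP01.dkd | while IFS= read -r dir; do
#       install -d -o isp01 -g isp01 "$dir"
#   done
```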

Caution

Copy both db2ese.lic and dsmreg.sl into the license directory defined by “licdir”. Otherwise the ISP server is not licensed correctly and runs with a trial (evaluation) license. Both files are part of your ISP installation package.
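A quick pre-flight check can catch a missing license file before the first container start. Otherwise you would only see ANR9639W and the evaluation license messages later. This sketch assumes that licdir is given as "licdir <path>" on its own line in the .dkd file. It checks for the DB2 license (db2ese.lic) and the ISP license file (dsmreg.sl). The function name check_licenses is hypothetical.

```shell
# Hypothetical helper: verify that db2ese.lic and dsmreg.sl exist (non-empty)
# in the licdir of the given .dkd file.
# Return codes: 0 = both present, 1 = at least one missing, 2 = no licdir entry.
check_licenses() {
    licdir=$(awk '$1 == "licdir" { print $2 }' "$1")
    if [ -z "$licdir" ]; then
        echo "no licdir entry in $1" >&2
        return 2
    fi
    missing=0
    for f in db2ese.lic dsmreg.sl; do
        if [ ! -s "$licdir/$f" ]; then
            echo "missing or empty: $licdir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example: check_licenses /home/SPORD/ISP01/ISP01.dkd && echo "licenses in place"
```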

Step 3: Initial Container creation (Primary)

SPORD is now ready for the initial container start. By default, spordadm <Instance> create only prints the docker command, so that you can review the configuration before starting. To run the container directly, add the -execute parameter:

sles-01:/opt/generalstorage/spord/bin # spordadm ISP01 create

##################################################################

# General Storage SPORD - (c) 2022 General Storage Software GmbH #

##################################################################

INFO: Reading configuration file /etc/spord/spord.conf.

INFO: Receiving configuration for ISP01!

------------------------------

SPORDNAME: ISP01

SPORDBIN:

SPCONTBIN:

SPORDCONF: /etc/spord/spord.conf

SPORDHOME: /home/SPORD/ISP01

GSCCVERSION:

CUSTOMFILE:

DOCKIMG: dockercentos74:SP819.100-GSCC-DSMISI

ADDMAPPING: /home/stgpools/ISP01

MAINCOMMAND: create

ARGUMENTS:

------------------------------

INFO: Using docker image dockercentos74:SP819.100-GSCC-DSMISI!

CREATE dockercentos74:SP819.100-GSCC-DSMISI

DOCKER COMMAND(NEW): docker run -it --rm --privileged --net=host --name=ISP01 -v /etc/localtime:/etc/localtime -v /dev:/dev -v /home/SPORD/ISP01:/home/SPORD/ISP01 -v /opt/generalstorage/spord/bin:/opt/generalstorage/spord/bin -v /home/stgpools/ISP01:/home/stgpools/ISP01 dockercentos74:SP819.100-GSCC-DSMISI /opt/generalstorage/spord/bin/SPserver create /home/SPORD/ISP01/ISP01.dkd
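The DOCKER COMMAND(NEW) line in this preview can also be extracted with a filter instead of being selected and copied by hand. This sketch assumes that the preview prints exactly one line beginning with "DOCKER COMMAND(NEW): ", as in the output above. The function name extract_docker_cmd is hypothetical, and the file /tmp/ISP01-create.sh is only an example location.

```shell
# Hypothetical helper: read spordadm create output on stdin and print only the
# docker command that follows "DOCKER COMMAND(NEW): ".
extract_docker_cmd() {
    sed -n 's/^DOCKER COMMAND(NEW): //p'
}

# Example (review the file before running it):
#   spordadm ISP01 create | extract_docker_cmd > /tmp/ISP01-create.sh
#   cat /tmp/ISP01-create.sh
#   sh /tmp/ISP01-create.sh
```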

If you did not specify -execute, as in the example above, check that the output is correct. If everything is fine, copy the command after “DOCKER COMMAND(NEW):”, paste it into your terminal and run it:

sles-01:/opt/generalstorage/spord/bin # docker run -it --rm --privileged --net=host --name=ISP01 -v /etc/localtime:/etc/localtime -v /dev:/dev -v /home/SPORD/ISP01:/home/SPORD/ISP01 -v /opt/generalstorage/spord/bin:/opt/generalstorage/spord/bin -v /home/stgpools/ISP01:/home/stgpools/ISP01 dockercentos74:SP819.100-GSCC-DSMISI /opt/generalstorage/spord/bin/SPserver create /home/SPORD/ISP01/ISP01.dkd

SYSTEM PREPARATION

INFO: Starting SPdb2init: /opt/generalstorage/spord/bin/SPdb2init

DETECTING ENVIRONMENT

PROCESSING DKDFILE /home/SPORD/ISP01/ISP01.dkd..............

ISP User: isp01

ISP UserID: 1601

ISP Group: isp01

ISP GroupID: 1601

ISP User Home Directory: /home/SPORD/ISP01/cfg

DB Directory Location(s): /home/SPORD/ISP01/db1,/home/SPORD/ISP01/db2,/home/SPORD/ISP01/db3,/home/SPORD/ISP01/db4

Active Log Directory: /home/SPORD/ISP01/act

Archive Log Directory: /home/SPORD/ISP01/arc

Active Log Size: activelogsize=2048

ISP DB2 Instance Directory: /home/SPORD/ISP01/cfg

DB2 License Directory: /home/SPORD/ISP01

TCP Port: 1601

DB2 PREPARATION

CREATING GROUP..............

CREATING USER..............

useradd: warning: the home directory already exists.

Not copying any file from skel directory into it.

CREATING USER PROFILE..............

CREATING NEW INSTANCE (FORMAT)!

INFO: Checking Direcotories now...

WARNING: No dsmserv.opt found, creating base option file!

PREPARING dsmserv format command: /home/SPORD/ISP01/cfg/dsmserv.format

PREPARING DATABASE MANAGER..............

isp01

REMOVING DATABASE MANAGER BACKUP..............

BACKING UP DATABASE MANAGER..............

mv: cannot stat '/home/SPORD/ISP01/cfg/sqllib': No such file or directory

CREATING DATABASE INSTANCE..............

DBI1446I The db2icrt command is running.

DB2 installation is being initialized.

Total number of tasks to be performed: 4

Total estimated time for all tasks to be performed: 309 second(s)

Task #1 start

Description: Setting default global profile registry variables

Estimated time 1 second(s)

Task #1 end

Task #2 start

Description: Initializing instance list

Estimated time 5 second(s)

Task #2 end

Task #3 start

Description: Configuring DB2 instances

Estimated time 300 second(s)

Task #3 end

Task #4 start

Description: Updating global profile registry

Estimated time 3 second(s)

Task #4 end

The execution completed successfully.

For more information see the DB2 installation log at "/tmp/db2icrt.log.215".

DBI1070I Program db2icrt completed successfully.

DB20000I The UPDATE DATABASE MANAGER CONFIGURATION command completed

successfully.

SETTING DATABASE INSTANCE ENVIRONMENT..............

APPLYING DB2 LICENSE..............

LIC1402I License added successfully.

LIC1426I This product is now licensed for use as outlined in your License Agreement. USE OF THE PRODUCT CONSTITUTES ACCEPTANCE OF THE TERMS OF THE IBM LICENSE AGREEMENT, LOCATED IN THE FOLLOWING DIRECTORY: "/opt/tivoli/tsm/db2/license/en_US.iso88591"

PREPARING DB2 CLIENT FOR DBB..............

INFO: Environment prepared for /home/SPORD/ISP01/ISP01.dkd

INFO: Preparing DBB for SPserver

INFO: Formatting ISP01 from /home/SPORD/ISP01/ISP01.dkd

su -c "cd /home/SPORD/ISP01/cfg;dsmserv format dbdir=/home/SPORD/ISP01/db1,/home/SPORD/ISP01/db2,/home/SPORD/ISP01/db3,/home/SPORD/ISP01/db4 activelogsize=2048 activelogdirectory=/home/SPORD/ISP01/act archlogdirectory=/home/SPORD/ISP01/arc " - isp01

ANR7800I DSMSERV generated at 10:58:46 on Dec 20 2019.

IBM Spectrum Protect for Linux/x86_64

Version 8, Release 1, Level 9.100

Licensed Materials - Property of IBM

(C) Copyright IBM Corporation 1990, 2019.

All rights reserved.

U.S. Government Users Restricted Rights - Use, duplication or disclosure

restricted by GSA ADP Schedule Contract with IBM Corporation.

ANR7801I Subsystem process ID is 10736.

ANR0900I Processing options file /home/SPORD/ISP01/cfg/dsmserv.opt.

ANR7814I Using instance directory /home/SPORD/ISP01/cfg.

ANR3339I Default Label in key data base is TSM Server SelfSigned SHA Key.

ANR4726I The ICC support module has been loaded.

ANR0152I Database manager successfully started.

ANR2976I Offline DB backup for database TSMDB1 started.

ANR2974I Offline DB backup for database TSMDB1 completed successfully.

ANR0992I Server's database formatting complete.

ANR0369I Stopping the database manager because of a server shutdown.

You are now inside the newly created container. To continue, start the ISP instance and register an initial administrator with system privilege:

SPORD@sles-01 # su - isp01

Last login: Thu Nov 25 11:31:10 CET 2021 on pts/2

SPORD-isp01@sles-01 #dsmserv

ANR7800I DSMSERV generated at 10:58:46 on Dec 20 2019.

IBM Spectrum Protect for Linux/x86_64

Version 8, Release 1, Level 9.100

Licensed Materials - Property of IBM

(C) Copyright IBM Corporation 1990, 2019.

All rights reserved.

U.S. Government Users Restricted Rights - Use, duplication or disclosure

restricted by GSA ADP Schedule Contract with IBM Corporation.

ANR7801I Subsystem process ID is 11193.

ANR0900I Processing options file /home/SPORD/ISP01/cfg/dsmserv.opt.

ANR7814I Using instance directory /home/SPORD/ISP01/cfg.

ANR3339I Default Label in key data base is TSM Server SelfSigned SHA Key.

ANR4726I The ICC support module has been loaded.

ANR0990I Server restart-recovery in progress.

ANR0152I Database manager successfully started.

ANR1628I The database manager is using port 51601 for server connections.

ANR2277W The server master encryption key was not found. A new master encryption key will be created.

ANR1636W The server machine GUID changed: old value (), new value (f6.06.95.ec.5a.17.ea.11.95.96.02.42.ac.11.00.02).

ANR2100I Activity log process has started.

ANR4726I The NAS-NDMP support module has been loaded.

ANR1794W IBM Spectrum Protect SAN discovery is disabled by options.

ANR2200I Storage pool BACKUPPOOL defined (device class DISK).

ANR2200I Storage pool ARCHIVEPOOL defined (device class DISK).

ANR2200I Storage pool SPACEMGPOOL defined (device class DISK).

ANR2803I License manager started.

ANR9639W Unable to load Shared License File dsmreg.sl.

ANR9652I An EVALUATION LICENSE for IBM Spectrum Protect for Data Retention will expire on 12/26/2021.

ANR9652I An EVALUATION LICENSE for IBM Spectrum Protect Basic Edition will expire on 12/26/2021.

ANR9652I An EVALUATION LICENSE for IBM Spectrum Protect Extended Edition will expire on 12/26/2021.

ANR2828I Server is licensed to support IBM Spectrum Protect for Data Retention.

ANR2828I Server is licensed to support IBM Spectrum Protect Basic Edition.

ANR2828I Server is licensed to support IBM Spectrum Protect Extended Edition.

ANR0984I Process 1 for AUDIT LICENSE started in the BACKGROUND at 11:37:57 AM.

ANR2820I Automatic license audit started as process 1.

ANR8598I Outbound SSL Services were loaded.

ANR8230I TCP/IP Version 6 driver ready for connection with clients on port 1601.

ANR8200I TCP/IP Version 4 driver ready for connection with clients on port 1601.

ANR9652I An EVALUATION LICENSE for IBM Spectrum Protect for Data Retention will expire on 12/26/2021.

ANR9652I An EVALUATION LICENSE for IBM Spectrum Protect Basic Edition will expire on 12/26/2021.

ANR9652I An EVALUATION LICENSE for IBM Spectrum Protect Extended Edition will expire on 12/26/2021.

ANR2560I Schedule manager started.

ANR2825I License audit process 1 completed successfully - 0 nodes audited.

ANR0985I Process 1 for AUDIT LICENSE running in the BACKGROUND completed with completion state SUCCESS at 11:37:58 AM.

ANR0281I Servermon successfully started during initialization, using process 11224.

ANR0098W This system does not meet the minimum memory requirements.

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR0993I Server initialization complete.

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

IBM Spectrum Protect:SERVER1>

ANR0916I IBM Spectrum Protect distributed by International Business Machines is now ready for use.

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: INSTRUMENTATION END

ANR2017I Administrator SERVER_CONSOLE issued command: QUERY PROCESS

ANR0944E QUERY PROCESS: No active processes found.

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW LOCKS ONLYW=Y

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR1434W No files have been identified for automatically storing device configuration information.

ANR4502W No files have been defined for automatically storing sequential volume history information.

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW DBCONN

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW DEDUPTHREAD

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW BANNER

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW RESQ

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TXNT LOCKD=N

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2034E QUERY MOUNT: No match found using this criteria.

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2034E QUERY SESSION: No match found using this criteria.

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW SESS F=D

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW THREADS

ANR2017I Administrator SERVER_CONSOLE issued command: SHOW TIME

ANR0984I Process 2 for EXPIRE INVENTORY (Automatic) started in the BACKGROUND at 11:38:06 AM.

ANR0811I Inventory client file expiration started as process 2.

ANR0167I Inventory file expiration process 2 processed for 0 minutes.

ANR0812I Inventory file expiration process 2 is completed: processed 0 nodes, examined 0 objects, retained 0 objects, deleted 0 backup objects, 0 archive objects, 0 database backup volumes,

and 0 recovery plan files. 0 objects were retried 0 errors were detected, and 0 objects were skipped.

ANR0985I Process 2 for EXPIRE INVENTORY (Automatic) running in the BACKGROUND completed with completion state SUCCESS at 11:38:06 AM.

ISP01 is up and awaits commands:

ANR2017I Administrator SERVER_CONSOLE issued command: SET MINPWLENGTH 1

ANR2138I Minimum password length set to 1.

IBM Spectrum Protect:SERVER1>

reg admin isp isp

ANR2017I Administrator SERVER_CONSOLE issued command: REGISTER ADMIN isp ?***?

ANR2068I Administrator ISP registered.

IBM Spectrum Protect:SERVER1>

grant auth isp cl=sys

ANR2017I Administrator SERVER_CONSOLE issued command: GRANT AUTHORITY isp cl=sys

ANR2076I System privilege granted to administrator ISP.

When you have finished your initial commands, exit the container by pressing CTRL+D. Then prepare the dsm.sys and dsm.opt files in the SPORD home directory; SPORD links them to the correct paths inside the container.

dsm.sys:

sles-01:~ # cat /home/SPORD/ISP01/dsm.sys

servername TSMDBMGR_ISP01

commmethod tcpip

tcpserveraddr localhost

tcpport 1601

passworddir /home/SPORD/ISP01/cfg

errorlogname /home/SPORD/ISP01/cfg/tsmdbmgr.log

nodename $$_TSMDBMGR_$$

SERVERNAME isp01

TCPPORT 1601

TCPSERVERADDRESS 192.168.178.60

PASSWORDDIR /home/SPORD/ISP01/

NFSTIMEOUT 5

SERVERNAME isp01_local

TCPPORT 1601

TCPSERVERADDRESS 127.0.0.1

PASSWORDDIR /home/SPORD/ISP01/

NFSTIMEOUT 5

SERVERNAME isp01_remote

TCPPORT 1601

TCPSERVERADDRESS sles-02.fritz.box

PASSWORDDIR /home/SPORD/ISP01/

NFSTIMEOUT 5

dsm.opt:

sles-01:~ # cat /home/SPORD/ISP01/dsm.opt

SERVERNAME isp01
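A typo in SERVERNAME is easy to miss in these two files. The following sketch checks that the server selected in dsm.opt has a matching SERVERNAME stanza in dsm.sys. It compares option and server names case-insensitively, because dsm.sys above mixes "servername" and "SERVERNAME". Treating server names as case-insensitive is an assumption. The function name check_servername is hypothetical.

```shell
# Hypothetical helper: check that dsm.opt's SERVERNAME is defined in dsm.sys.
# Usage: check_servername <dsm.opt> <dsm.sys>
# Return codes: 0 = found, 1 = not defined, 2 = no SERVERNAME in dsm.opt.
check_servername() {
    opt_name=$(awk 'toupper($1) == "SERVERNAME" { print toupper($2); exit }' "$1")
    [ -n "$opt_name" ] || { echo "no SERVERNAME in $1" >&2; return 2; }
    if awk -v want="$opt_name" '
        toupper($1) == "SERVERNAME" && toupper($2) == want { found = 1 }
        END { exit !found }' "$2"; then
        echo "$opt_name found in $2"
    else
        echo "$opt_name not defined in $2" >&2
        return 1
    fi
}

# Example: check_servername /home/SPORD/ISP01/dsm.opt /home/SPORD/ISP01/dsm.sys
```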

If you need specific settings in dsmserv.opt, edit the file now:

sles-01:~ # cat /home/SPORD/ISP01/cfg/dsmserv.opt

COMMMETHOD TCPIP

TCPPORT 1601

ACTIVELOGSize 2048

ACTIVELOGDirectory /home/SPORD/ISP01/act

ARCHLOGDirectory /home/SPORD/ISP01/arc

VOLUMEHISTORY /home/stgpools/ISP01/volhist.out

DEVCONF /home/stgpools/ISP01/devconf.out

ALIASHALT stoptsm

Note

To allow GSCC to stop ISP later on, add the line “ALIASHALT stoptsm” to dsmserv.opt.
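If you script this step, an idempotent variant avoids duplicate entries when it is run more than once. The sketch below appends "ALIASHALT stoptsm" only if no ALIASHALT line exists yet. It also makes sure the option starts on its own line, even if the file lacks a trailing newline. The function name ensure_aliashalt and its default path are assumptions based on this example's instance layout.

```shell
# Hypothetical helper: add "ALIASHALT stoptsm" to dsmserv.opt unless an
# ALIASHALT entry is already present.
ensure_aliashalt() {
    opt="${1:-/home/SPORD/ISP01/cfg/dsmserv.opt}"
    if grep -qi '^[[:space:]]*ALIASHALT[[:space:]]' "$opt" 2>/dev/null; then
        # An entry exists: show it and leave the file unchanged.
        grep -i '^[[:space:]]*ALIASHALT[[:space:]]' "$opt"
        return 0
    fi
    # If the last byte is not a newline, add one so the option gets its own line.
    if [ -s "$opt" ] && [ -n "$(tail -c 1 "$opt")" ]; then
        printf '\n' >> "$opt"
    fi
    printf 'ALIASHALT stoptsm\n' >> "$opt"
}
```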

Step 4: Set up GSCC configuration (Both)

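Now we set up the GSCC configuration as described in the GSCC part of this documentation. In our environment, the files are kept in /home/SPORD/ISP01/etcgscc on the host. The ADDMAPPING entry in spord.conf mounts this directory as /etc/gscc inside the container: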

sles-01:/home/SPORD/ISP01/etcgscc # ll

-rw-r--r-- 1 root root 3283 Nov 25 15:58 ISP01.def

-rw-r--r-- 1 root root 4005 Oct 8 15:18 README

-rw-r--r-- 1 root root 146 Nov 25 13:57 cl.def

-rw-r--r-- 1 root root 10 Nov 25 13:58 clipup

-rw-r--r-- 1 root root 1040 Nov 25 14:31 gscc.conf

-rw-r--r-- 1 root root 8 Oct 8 15:19 gscc.version

-rw-r--r-- 1 root root 18 Nov 25 14:33 gsuidcustom.conf

-rw-r--r-- 1 root root 15 Nov 25 14:35 heartbeat

-rw-r--r-- 1 root root 38 Nov 25 15:16 passweb

drwxr-xr-x 2 root root 4096 Nov 26 15:29 samples

drwxr-xr-x 2 root root 78 Nov 26 15:29 ui

Let's have a look at the contents of these configuration files:

To make GSCC inside the container start in operator mode, create an empty file named SPORD.OPERATOR in the SPORD home directory:

sles-01:/home/SPORD/ISP01 # touch SPORD.OPERATOR

sles-01:/home/SPORD/ISP01 # ll

total 32

-rw-r--r-- 1 isp01 isp01 377 Nov 25 10:37 ISP01.dkd

drwxr-xr-x 3 root root 17 Nov 25 12:29 Nodes

-rw-r--r-- 1 root root 0 Nov 25 14:59 SPORD.OPERATOR

...

Then start the container:

sles-01:/home/SPORD/ISP01 # spordadm ISP01 start

Starting the container ...

"docker ps" output:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

737b7bc3340f dockercentos74:SP819.100-GSCC-DSMISI "/opt/generalstorage…" 2 seconds ago Up 1 second gscc-ISP01

Now we allow GSCC to communicate with ISP by running the settsmadm command inside gsccadm:

sles-01:~ # spordadm 1

You're inside gscc-ISP01 container

ISP01@sles-01 # gsccadm

General Storage Cluster Controller for TSM - Version 3.8.5.4

Command Line Administrative Interface

(C) Copyright General Storage 2017

Please enter user id: admin

Please enter password:

GSCC Version(CLI Revision): 3.8.5.4(2398)

gscc sles-01>settsmadm

General Storage Cluster Controller for TSM - Version 3.8.5.4

TSM Admin Registration - SECURED

Please enter TSM user id: isp

Please enter TSM password:

Please enter GSCC key:

Please enter GSCC key again:

INFO: key verification OK.

INFO: Encrypting and saving input.

INFO: Completed.

Now we add an additional user for administering GSCC itself:

sles-01:~ # spordadm 1 gsccadm

General Storage Cluster Controller for TSM - Version 3.8.5.4

Command Line Administrative Interface

(C) Copyright General Storage 2017

Please enter user id: admin

Please enter password:

GSCC Version(CLI Revision): 3.8.5.4(2398)

gscc sles-01>update admin add isp isp

INFO: User isp added !

Optionally, we can delete the default GSCC “admin” user. Afterwards, logging in as “admin” fails, as shown here:

gscc sles-01>update admin del admin admin

INFO: User admin deleted !

gscc sles-01>quit

sles-01:~ # spordadm 1 gsccadm

General Storage Cluster Controller for TSM - Version 3.8.5.4

Command Line Administrative Interface

(C) Copyright General Storage 2017

Please enter user id: admin

Please enter password:

ERROR: Authentication Failure !

Once everything is configured, we can start the ISP instance via gsccadm:

gscc sles-01>oper starttsm ISP01

INFO: Command starttsm for ISP01 listed !

Step 5: Set up DB2 HADR configuration (Primary)

Now we need to configure the systems for DB2 HADR and get HADR running.
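In the transcript of this step, each database parameter is set with its own db2 update db cfg call. The DB2 command line processor also accepts several keyword/value pairs after USING in a single UPDATE DATABASE CONFIGURATION command. The sketch below only prints such a combined command, using the parameters and values shown in this step. It does not cover the GRANT USAGE statement or any further HADR parameters. The function name hadr_cfg_cmd is hypothetical. Run the printed command as the instance owner inside the container. On the standby, swap the local and remote host names.

```shell
# Hypothetical helper: print one combined "db2 update db cfg" command.
# Usage: hadr_cfg_cmd <db> <local_host> <remote_host> <hadr_port> <remote_instance>
hadr_cfg_cmd() {
    db=$1 local_host=$2 remote_host=$3 svc=$4 remote_inst=$5
    printf 'db2 update db cfg for %s using LOGINDEXBUILD yes HADR_LOCAL_HOST %s HADR_LOCAL_SVC %s HADR_REMOTE_HOST %s HADR_REMOTE_SVC %s HADR_REMOTE_INST %s\n' \
        "$db" "$local_host" "$svc" "$remote_host" "$svc" "$remote_inst"
}

# Example for the primary in this setup:
#   hadr_cfg_cmd TSMDB1 sles-01.fritz.box sles-02.fritz.box 60100 isp01
```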

First, connect to the primary instance container as the instance owner:

sles-01:/home/SPORD/ISP01/cfg # spordadm 1

You're inside gscc-ISP01 container

ISP01@sles-01 # su - isp01

Last login: Fri Nov 26 09:44:29 CET 2021 on pts/1

Then connect to the database and apply the settings necessary for HADR:

SPORD-isp01@sles-01 #db2 connect to tsmdb1

Database Connection Information

Database server = DB2/LINUXX8664 11.1.4.4

SQL authorization ID = ISP01

Local database alias = TSMDB1

SPORD-isp01@sles-01 #db2 GRANT USAGE ON WORKLOAD SYSDEFAULTUSERWORKLOAD TO PUBLIC

DB20000I The SQL command completed successfully.

SPORD-isp01@sles-01 #db2 update db cfg for TSMDB1 using LOGINDEXBUILD yes

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SQL1363W One or more of the parameters submitted for immediate modification were not changed dynamically. For these configuration parameters, the database must be shutdown and reactivated before the configuration parameter changes become effective.

SPORD-isp01@sles-01 #db2 update db cfg for TSMDB1 using HADR_LOCAL_HOST sles-01.fritz.box

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SQL1363W One or more of the parameters submitted for immediate modification were not changed dynamically. For these configuration parameters, the database must be shutdown and reactivated before the configuration parameter changes become effective.

SPORD-isp01@sles-01 #db2 update db cfg for TSMDB1 using HADR_LOCAL_SVC 60100

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SQL1363W One or more of the parameters submitted for immediate modification were not changed dynamically. For these configuration parameters, the database must be shutdown and reactivated before the configuration parameter changes become effective.

SPORD-isp01@sles-01 #db2 update db cfg for TSMDB1 using HADR_REMOTE_HOST sles-02.fritz.box

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SQL1363W One or more of the parameters submitted for immediate modification were not changed dynamically. For these configuration parameters, the database must be shutdown and reactivated before the configuration parameter changes become effective.

SPORD-isp01@sles-01 #db2 update db cfg for TSMDB1 using HADR_REMOTE_SVC 60100

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SQL1363W One or more of the parameters submitted for immediate modification were not changed dynamically. For these configuration parameters, the database must be shutdown and reactivated before the configuration parameter changes become effective.

SPORD-isp01@sles-01 #db2 update db cfg for TSMDB1 using HADR_REMOTE_INST isp01

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SQL1363W One or more of the parameters submitted for immediate modification were not changed dynamically. For these configuration parameters, the database must be shutdown and reactivated before the configuration parameter changes become effective.

SPORD-isp01@sles-01 #db2 update db cfg for TSMDB1 using HADR_SYNCMODE NEARSYNC

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SQL1363W One or more of the parameters submitted for immediate modification were not changed dynamically. For these configuration parameters, the database must be shutdown and reactivated before the configuration parameter changes become effective.

SPORD-isp01@sles-01 #db2 update db cfg for TSMDB1 using HADR_PEER_WINDOW 300

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SQL1363W One or more of the parameters submitted for immediate modification were not changed dynamically. For these configuration parameters, the database must be shutdown and reactivated before the configuration parameter changes become effective.
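The eight `db2 update db cfg` calls above follow a fixed pattern, so they can be scripted. The following is a minimal sketch, assuming it is run as the instance owner (here `isp01`) inside the container; the function name `hadr_cfg_cmds` and its argument order are hypothetical and not part of GSCC, SPORD or DB2. It only prints the commands, so they can be reviewed before anything is changed.

```shell
#!/bin/sh
# Hypothetical helper (not part of GSCC/SPORD/DB2): print the db2 commands
# that set the HADR-related database configuration used in this example.
# Arguments: database, local host, remote host, HADR service port, remote instance.
hadr_cfg_cmds() {
    db=$1 lhost=$2 rhost=$3 svc=$4 rinst=$5
    # One "PARAMETER value" pair per line; fixed values mirror the example setup.
    printf '%s\n' \
        "LOGINDEXBUILD yes" \
        "HADR_LOCAL_HOST $lhost" \
        "HADR_LOCAL_SVC $svc" \
        "HADR_REMOTE_HOST $rhost" \
        "HADR_REMOTE_SVC $svc" \
        "HADR_REMOTE_INST $rinst" \
        "HADR_SYNCMODE NEARSYNC" \
        "HADR_PEER_WINDOW 300" |
    while read -r param value; do
        # Emit the command instead of running it, so the list can be reviewed first.
        echo "db2 update db cfg for $db using $param $value"
    done
}
```

On the primary this would be called as `hadr_cfg_cmds TSMDB1 sles-01.fritz.box sles-02.fritz.box 60100 isp01`; on the standby the two host names are swapped. After reviewing the output, it can be executed in the instance owner's shell with `eval "$(hadr_cfg_cmds ...)"`.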

Next we need to do an initial backup and restore to the standby side. Start with the backup on the primary site:

SPORD-isp01@sles-01 #db2 backup database TSMDB1 online to /home/SPORD/ISP01/ COMPRESS include logs

Backup successful. The timestamp for this backup image is : 20211126101835
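The file written by this backup is named after the database alias, backup type, instance, database partition and the timestamp reported above (here `TSMDB1.0.isp01.DBPART000.20211126101835.001`). The sketch below captures the timestamp from the `db2 backup` message and rebuilds that file name for the copy to the standby. It assumes a single-partition database (`DBPART000`), a full backup (type `0`) and a single image file (sequence `001`), as in this example; the function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers (not part of DB2 or GSCC).

# Read "db2 backup" output on stdin and print the 14-digit image timestamp
# that follows "The timestamp for this backup image is :".
backup_timestamp() {
    sed -n 's/.*timestamp for this backup image is : *\([0-9]\{14\}\).*/\1/p'
}

# Build the image file name DBALIAS.TYPE.INSTANCE.DBPARTnnn.TIMESTAMP.SEQ,
# assuming a full backup (type 0), partition 000 and sequence 001.
backup_image_name() {
    db=$1 inst=$2 ts=$3
    printf '%s.0.%s.DBPART000.%s.001\n' "$db" "$inst" "$ts"
}
```

For example, `ts=$(db2 backup database TSMDB1 online to /home/SPORD/ISP01/ COMPRESS include logs | backup_timestamp)` followed by `backup_image_name TSMDB1 isp01 "$ts"` prints the name of the file to copy in Step 6.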

Continue with the standby system.

Step 6: Set up DB2 HADR configuration (Standby)

In the previous step we backed up the primary site’s database. Now we restore this backup on the standby site.

Copy the backup from primary to standby:

sles-01:/home/SPORD/ISP01 # scp TSMDB1.0.isp01.DBPART000.20211126101835.001 sles-02:/home/SPORD/ISP01/

Password:

TSMDB1.0.isp01.DBPART000.20211126101835.001 100% 128MB 128.1MB/s 00:01

Make sure that the backup file is readable by the instance user on the standby system.

Restore the backup file:

SPORD-isp01@sles-02 #db2 restore db TSMDB1 from /home/SPORD/ISP01/ on /home/SPORD/ISP01/db1,/home/SPORD/ISP01/db2,/home/SPORD/ISP01/db3,/home/SPORD/ISP01/db4 into TSMDB1 logtarget /home/SPORD/ISP01/arc replace history file

SQL2523W Warning! Restoring to an existing database that is different from the database on the backup image, but have matching names. The target database will be overwritten by the backup version. The Roll-forward recovery logs associated with the target database will be deleted.

Do you want to continue ? (y/n) Y

DB20000I The RESTORE DATABASE command completed successfully.

After restoring the database, configure the HADR settings on the standby. Note that the local and remote host names are swapped compared to the primary:

SPORD-isp01@sles-02 #db2 update db cfg for TSMDB1 using LOGINDEXBUILD yes

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SPORD-isp01@sles-02 #db2 update db cfg for TSMDB1 using HADR_LOCAL_HOST sles-02.fritz.box

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SPORD-isp01@sles-02 #db2 update db cfg for TSMDB1 using HADR_LOCAL_SVC 60100

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SPORD-isp01@sles-02 #db2 update db cfg for TSMDB1 using HADR_REMOTE_HOST sles-01.fritz.box

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SPORD-isp01@sles-02 #db2 update db cfg for TSMDB1 using HADR_REMOTE_SVC 60100

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SPORD-isp01@sles-02 #db2 update db cfg for TSMDB1 using HADR_REMOTE_INST isp01

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SPORD-isp01@sles-02 #db2 update db cfg for TSMDB1 using HADR_SYNCMODE NEARSYNC

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.

SPORD-isp01@sles-02 #db2 update db cfg for TSMDB1 using HADR_PEER_WINDOW 300

DB20000I The UPDATE DATABASE CONFIGURATION command completed successfully.
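Before starting HADR, it can help to compare the HADR settings of both systems. `db2 get db cfg for TSMDB1` lists each parameter as `Description (PARAMETER) = value`; the sketch below, assuming that layout, reduces the output to `PARAMETER=value` lines for the HADR parameters so that the files from primary and standby can be compared with `diff`. The function name `hadr_params` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read "db2 get db cfg" output on stdin and print the
# HADR_* parameters as PARAMETER=value, one per line.
# Assumes lines of the form "  Description (PARAMETER) = value".
hadr_params() {
    sed -n 's/.*(\(HADR_[A-Z_]*\)) *= *\(.*\)$/\1=\2/p'
}
```

Running `db2 get db cfg for TSMDB1 | hadr_params` on each system and comparing the results should, in this example setup, show differences only in `HADR_LOCAL_HOST` and `HADR_REMOTE_HOST`, which are swapped.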

When the configuration is complete, we can start the database as the HADR standby:

SPORD-isp01@sles-02 #db2 start hadr on db tsmdb1 as standby

DB20000I The START HADR ON DATABASE command completed successfully.

(Optional) If you are unsure whether the HADR configuration is correct and the database is up and running, check it on the primary with:

SPORD-isp01@sles-01 #db2pd -hadr -db tsmdb1

Database Member 0 -- Database TSMDB1 -- Active -- Up 0 days 00:00:40 -- Date 2021-11-26-10.58.25.153490

HADR_ROLE = PRIMARY

REPLAY_TYPE = PHYSICAL

HADR_SYNCMODE = NEARSYNC

STANDBY_ID = 1

LOG_STREAM_ID = 0

HADR_STATE = PEER

HADR_FLAGS = TCP_PROTOCOL

PRIMARY_MEMBER_HOST = sles-01.fritz.box

PRIMARY_INSTANCE = isp01

PRIMARY_MEMBER = 0

STANDBY_MEMBER_HOST = sles-02.fritz.box

STANDBY_INSTANCE = isp01

STANDBY_MEMBER = 0

HADR_CONNECT_STATUS = CONNECTED

HADR_CONNECT_STATUS_TIME = 11/26/2021 10:57:48.494204 (1637920668)

HEARTBEAT_INTERVAL(seconds) = 30

HEARTBEAT_MISSED = 0

HEARTBEAT_EXPECTED = 1

HADR_TIMEOUT(seconds) = 120

TIME_SINCE_LAST_RECV(seconds) = 7

PEER_WAIT_LIMIT(seconds) = 0

LOG_HADR_WAIT_CUR(seconds) = 0.000

LOG_HADR_WAIT_RECENT_AVG(seconds) = 0.000000

LOG_HADR_WAIT_ACCUMULATED(seconds) = 0.000

LOG_HADR_WAIT_COUNT = 0

SOCK_SEND_BUF_REQUESTED,ACTUAL(bytes) = 0, 87040

SOCK_RECV_BUF_REQUESTED,ACTUAL(bytes) = 0, 376960

PRIMARY_LOG_FILE,PAGE,POS = S0000011.LOG, 0, 5921678017

STANDBY_LOG_FILE,PAGE,POS = S0000011.LOG, 0, 5921678017

HADR_LOG_GAP(bytes) = 0

STANDBY_REPLAY_LOG_FILE,PAGE,POS = S0000011.LOG, 0, 5921678017

STANDBY_RECV_REPLAY_GAP(bytes) = 0

PRIMARY_LOG_TIME = 11/26/2021 10:52:26.000000 (1637920346)

STANDBY_LOG_TIME = 11/26/2021 10:52:26.000000 (1637920346)

STANDBY_REPLAY_LOG_TIME = 11/26/2021 10:52:26.000000 (1637920346)

STANDBY_RECV_BUF_SIZE(pages) = 4096

STANDBY_RECV_BUF_PERCENT = 0

STANDBY_SPOOL_LIMIT(pages) = 524288

STANDBY_SPOOL_PERCENT = 0

STANDBY_ERROR_TIME = NULL

PEER_WINDOW(seconds) = 300

PEER_WINDOW_END = 11/26/2021 11:03:20.000000 (1637921000)

READS_ON_STANDBY_ENABLED = N
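The most important fields in this output are `HADR_STATE`, which reads `PEER` once the standby has caught up, and `HADR_CONNECT_STATUS`, which should be `CONNECTED`. For scripted checks, a minimal sketch that parses the `db2pd -hadr` output, assuming the `FIELD = value` layout shown above; the function name `hadr_in_peer` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read "db2pd -hadr -db <db>" output on stdin, print the
# HADR state and connection status, and return 0 only if the pair is
# CONNECTED and in PEER state.
hadr_in_peer() {
    awk -F' = ' '
        {
            # Trim padding around field name and value.
            gsub(/^[ \t]+|[ \t]+$/, "", $1)
            gsub(/^[ \t]+|[ \t]+$/, "", $2)
        }
        $1 == "HADR_STATE"          { state = $2 }
        $1 == "HADR_CONNECT_STATUS" { conn  = $2 }
        END {
            printf "HADR_STATE=%s HADR_CONNECT_STATUS=%s\n", state, conn
            ok = (state == "PEER" && conn == "CONNECTED")
            exit(ok ? 0 : 1)
        }'
}
```

Usage: `db2pd -hadr -db tsmdb1 | hadr_in_peer && echo "HADR pair is in peer state"`.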

Step 7: Configure file sync for GSCC (Standby)

If not already done, configure the dsmserv.opt of your ISP instance to specify VOLUMEHISTORY and DEVCONF. Then start a database backup to check that the configuration is correct:
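To confirm from the shell that both options are present in the options file, a minimal sketch follows. It assumes option names are matched case-insensitively at the start of a line and that lines starting with `*` are comments; the function name `check_dsmserv_opt` is hypothetical, and the path to dsmserv.opt has to be supplied for your instance.

```shell
#!/bin/sh
# Hypothetical helper: check that a dsmserv.opt file sets both VOLUMEHISTORY
# and DEVCONF (a prefix match, so DEVCONFIG is accepted as well).
# Commented lines ("* ...") do not count; missing options are reported on stderr.
check_dsmserv_opt() {
    opt=$1
    rc=0
    for name in VOLUMEHISTORY DEVCONF; do
        if ! grep -qi "^[[:space:]]*$name" "$opt"; then
            echo "missing: $name" >&2
            rc=1
        fi
    done
    return $rc
}
```

Usage: `check_dsmserv_opt /path/to/dsmserv.opt && echo "options present"`.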

Protect: ISP01>setopt VOLUMEHISTORY /home/stgpools/ISP01/volhist.out

Do you wish to proceed? (Yes (Y)/No (N)) y

ANR2119I The VOLUMEHISTORY option has been changed in the options file.

Protect: ISP01>backup db t=f devcl=ispdbb

ANR2280I Full database backup started as process 3.

ANS8003I Process number 3 started.

Protect: ISP01>setopt DEVCONF /home/stgpools/ISP01/devconf.out

Do you wish to proceed? (Yes (Y)/No (N)) y

ANR2119I The DEVCONF option has been changed in the options file.

Protect: ISP01>q pr

ANR0944E QUERY PROCESS: No active processes found.

ANS8001I Return code 11.

Protect: ISP01>q volhist t=dbb

Date/Time: 11/26/21 11:54:36

Volume Type: BACKUPFULL

Backup Series: 1

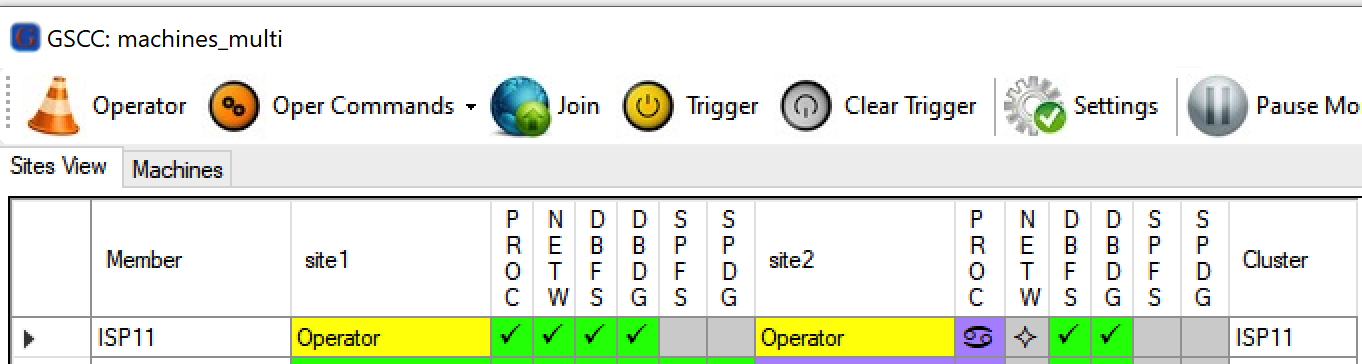

Backup Operation: 0